The 2026 Algo-Trading Stack: High-Frequency Architecture, Data Fidelity, and AI-Driven Execution

Executive Summary

The transition from 2025 to 2026 marks a watershed moment in the history of algorithmic cryptocurrency trading. The era of the "bedroom quant"—the solo developer running a monolithic Python script on a laptop—is effectively over, displaced by institutional-grade architectures that prioritize modularity, latency determinism, and artificial intelligence (AI) integration. The modern trading bot is no longer a script; it is a distributed system of microservices.

This report serves as a comprehensive technical guide for the Quantitative Developer tasked with building the "Ultimate Trading Stack" for this new epoch. It moves beyond basic API connectivity to explore the deep structural requirements of high-frequency trading (HFT) and regime-aware execution. We posit that the 2026 stack is defined by three converging forces:

- Hyper-Fidelity Data Ingestion: The shift from aggregated Level 2 (L2) snapshots to normalized Level 3 (L3) event streams, necessitating a battle for "atomic truth" among providers like CoinAPI and Kaiko.

- Context-Aware Intelligence: The integration of Large Language Models (LLMs) and Machine Learning (ML) not for price prediction, but for "Regime Detection," utilizing signals from analytics platforms like Sentora (formerly IntoTheBlock) and Nansen.

- Resilient Execution Gateways: The implementation of latency-aware routing logic that navigates the distinct rate-limit architectures of venues like Coinbase and Kraken.

We will deconstruct these pillars, offering a comparative analysis of data providers, a forensic examination of exchange APIs, and a blueprint for a secure, AI-augmented trading infrastructure. This is the architecture of alpha in 2026.

Affiliate Disclosure

This article contains affiliate links. We may earn a commission when you sign up through our partner links at no extra cost to you. This supports our free educational content. See our full disclosure policy.

Part I: The Feed – The War for Market Data Fidelity

In quantitative finance, data is the raw material of alpha. The quality of this material determines the theoretical ceiling of a strategy's performance. "Garbage in, garbage out" is a truism, but in HFT, the nuance is "Latency in, arbitrage loss out." As we evaluate the landscape for 2026, the market for crypto data has stratified into distinct tiers, each serving a specific architectural function. The "Data Source Battle" is not about finding a single winner, but about selecting the right tool for the specific layer of the stack: Execution, Risk, or Discovery.

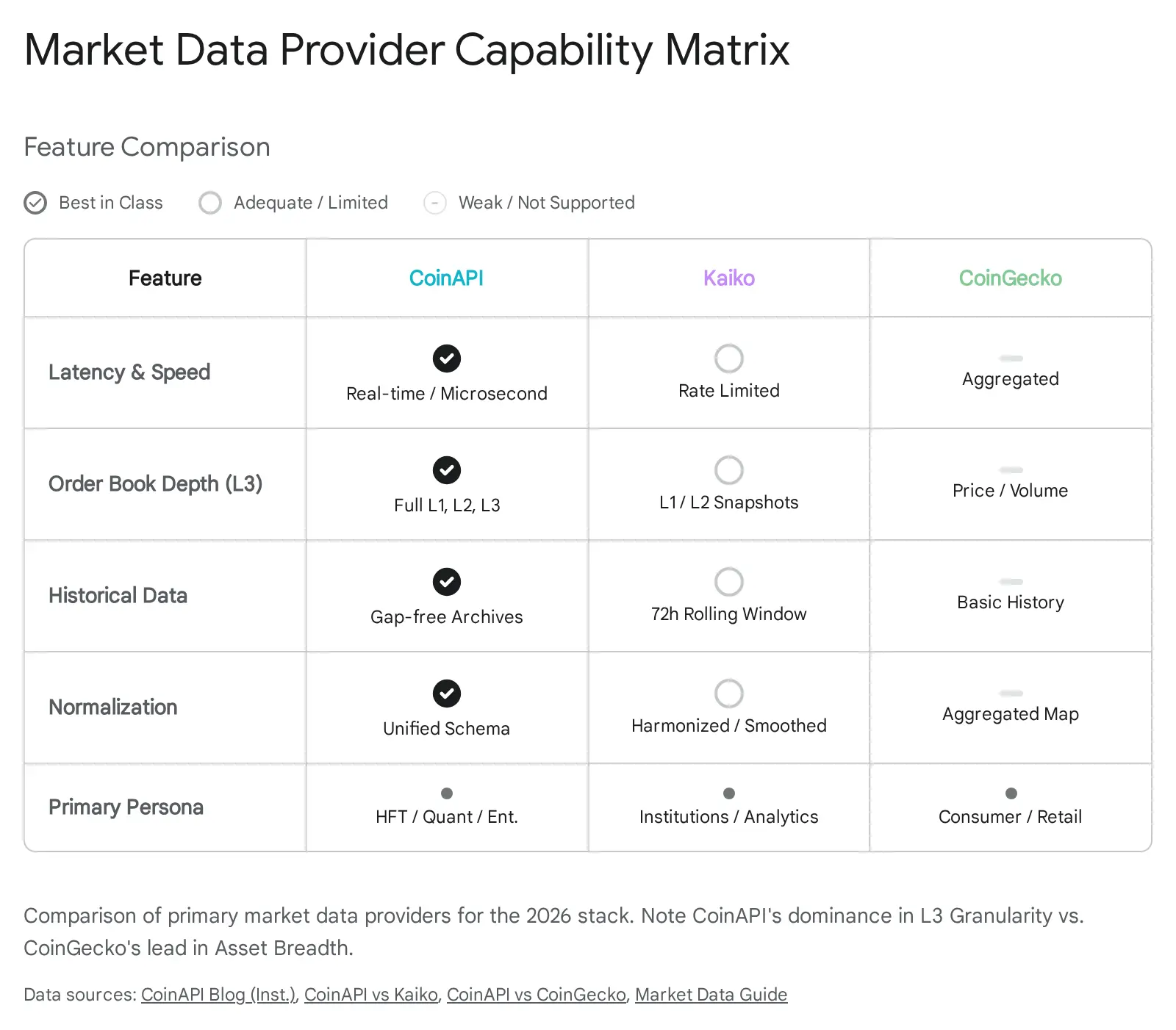

We analyze the three dominant incumbents—CoinAPI, Kaiko, and CoinGecko—across the dimensions of granularity, latency, and historical integrity.

1.1 The Granularity Spectrum: Moving Beyond L2

To understand the comparative value of these providers, one must first define the fidelity levels of market data. The 2026 stack demands a move beyond standard aggregations.

- Level 1 (L1) - Top of Book: This consists solely of the Best Bid and Best Offer (BBO). It is computationally cheap but strategically shallow. It tells you the price, but nothing about the conviction behind it.

- Level 2 (L2) - Aggregated Depth: This is the industry standard, providing price tiers and the total volume at each tier (e.g., 50 BTC available at $100,000). While sufficient for retail algorithms, L2 data obscures the "texture" of liquidity. It cannot distinguish between one large institutional order and a thousand small retail orders.

- Level 3 (L3) - The Atomic Stream: L3 data broadcasts every single message processed by the matching engine: order submission, modification, cancellation, and execution. It allows the reconstruction of the order book state with perfect fidelity.

CoinAPI: The L3 Microstructure Specialist

For the Quant Developer building an HFT bot, CoinAPI stands as the critical infrastructure layer for the "Hot Path": the code responsible for generating execution triggers. Its primary competitive advantage lies in its handling of L3 data.

Strategies that rely on Queue Position Estimation (modeling where a specific order sits in the execution priority queue) require L3 data. If a bot places a limit order at the best bid, it joins a queue. Knowing how many orders are ahead of it, and watching them cancel or fill in real time, allows the bot to calculate the probability of a fill. CoinAPI normalizes these raw L3 event streams across hundreds of exchanges into a unified schema.

This normalization is a massive engineering feat. Each exchange emits L3 data differently: Coinbase might use a specific sequence ID format, while Kraken uses another. CoinAPI abstracts this complexity, presenting the quant with a standardized "GPS feed" of the market. This contrasts sharply with aggregated feeds, which provide merely a "map" of where the market was, rather than a real-time stream of where it is going.
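Queue position estimation can be sketched as FIFO bookkeeping at a single price level. This is a minimal illustration. It assumes the normalized L3 stream has already been decoded into add, cancel, and fill events keyed by order ID. The `PriceLevelQueue` class and its method names are hypothetical; they are not part of CoinAPI's schema or SDK.

```python
from collections import OrderedDict


class PriceLevelQueue:
    """Resting orders at one price level, kept in time priority (FIFO)."""

    def __init__(self):
        # order_id -> remaining size, in arrival order
        self.orders = OrderedDict()

    def add(self, order_id, size):
        # New orders join the back of the queue.
        self.orders[order_id] = size

    def cancel(self, order_id):
        # A cancel removes the order wherever it sits.
        self.orders.pop(order_id, None)

    def fill(self, order_id, size):
        # An execution reduces the order's remaining size, removing it at zero.
        remaining = self.orders.get(order_id)
        if remaining is None:
            return
        remaining -= size
        if remaining <= 0:
            del self.orders[order_id]
        else:
            self.orders[order_id] = remaining

    def volume_ahead(self, my_order_id):
        """Total size queued in front of my_order_id."""
        ahead = 0.0
        for order_id, size in self.orders.items():
            if order_id == my_order_id:
                return ahead
            ahead += size
        raise KeyError(my_order_id)
```

A simple fill-probability model might compare `volume_ahead` with the volume recently traded at that price; that modeling step is left out. Modify events are also omitted. Where a venue sends a resized order to the back of the queue, a `cancel` followed by an `add` would model it.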

Kaiko: The L2 Analytics & Smoothing Engine

Kaiko occupies a different strategic niche: Risk Management and Smart Order Routing (SOR). Their architectural philosophy emphasizes "normalization + smoothing."

For a high-frequency strategy, "smoothing" is synonymous with "latency." It implies a processing delay where the provider filters out noise to present a coherent price. However, for HFT, the alpha is often found in the noise—in the micro-dislocations and crossed markets that smoothed feeds eliminate.

Where Kaiko excels is in the "Warm Path" of the stack. An institutional trading desk requires standardized L1/L2 snapshots to calculate Value-at-Risk (VaR) or to value a portfolio for compliance purposes. Kaiko’s data includes pre-computed metrics such as "Market Depth at ±1%" and "Slippage," which are invaluable for calibrating the sizing of orders. For a developer building a rebalancing bot rather than a scalper, Kaiko’s clean, harmonized feed reduces the engineering overhead of dealing with raw order book anomalies.
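Both metrics can be approximated from a plain L2 snapshot. The sketch below is illustrative only. It assumes the book arrives as lists of `(price, size)` tuples with the best level first. It defines slippage as the relative gap between the average fill price of a market buy and the mid price. Kaiko's own definitions may differ.

```python
def mid_price(bids, asks):
    # bids/asks: lists of (price, size), best level first
    return (bids[0][0] + asks[0][0]) / 2


def depth_within(bids, asks, pct=0.01):
    """Base-asset size resting within +/- pct of the mid price, per side."""
    mid = mid_price(bids, asks)
    bid_depth = sum(size for price, size in bids if price >= mid * (1 - pct))
    ask_depth = sum(size for price, size in asks if price <= mid * (1 + pct))
    return bid_depth, ask_depth


def buy_slippage(asks, mid, quantity):
    """Relative slippage vs. mid for a market buy that walks the ask side."""
    remaining = quantity
    cost = 0.0
    for price, size in asks:
        take = min(size, remaining)
        cost += take * price
        remaining -= take
        if remaining <= 0:
            break
    if remaining > 0:
        raise ValueError("not enough liquidity on the ask side")
    avg_price = cost / quantity
    return (avg_price - mid) / mid
```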

CoinGecko: The Discovery Engine

CoinGecko is fundamentally a consumer-facing aggregator. It prioritizes breadth over depth. While CoinAPI and Kaiko might cover the top 100-300 exchanges with high fidelity, CoinGecko tracks thousands of venues and tokens, including long-tail assets on decentralized exchanges (DEXs).

For an HFT bot, CoinGecko is useless as a trigger for trade execution due to its high latency and lack of order book detail. However, it is essential for Universe Selection (the "Cold Path"). The 2026 stack utilizes CoinGecko to scan the entire crypto universe periodically (e.g., hourly) to identify new tokens gaining volume traction. This output acts as a dynamic filter, updating the "Watchlist" of assets that the bot then subscribes to via the high-fidelity CoinAPI stream.
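The Cold Path's output is essentially a set difference between the current watchlist and a freshly selected universe. This sketch assumes the scan returns rows shaped like CoinGecko's `/coins/markets` response, which includes `id` and `total_volume`. The HTTP call is omitted. The volume floor and growth threshold are hypothetical tuning choices.

```python
def select_universe(rows, prev_volume, min_volume=5_000_000.0, min_growth=2.0):
    """Pick token ids whose 24h volume is both large and growing fast.

    rows: list of dicts with at least 'id' and 'total_volume'.
    prev_volume: dict of id -> total_volume from the previous scan.
    """
    picked = set()
    for row in rows:
        volume = row.get("total_volume") or 0.0
        if volume < min_volume:
            continue
        before = prev_volume.get(row["id"])
        # Tokens with no usable history count as new; others must grow by min_growth.
        if not before or volume / before >= min_growth:
            picked.add(row["id"])
    return picked


def diff_watchlist(current, target):
    """Return (to_subscribe, to_unsubscribe) for the Hot Path feed."""
    return target - current, current - target
```

A token that drops out of the selection is unsubscribed immediately. A production version might add a minimum holding period to avoid subscription churn.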

Data Provider Comparison: Choosing Your Stack Layer

| Provider | Data Level | Latency | Best Use Case | Exchange Coverage | Pricing Tier |
|---|---|---|---|---|---|
| CoinAPI | L3 (Atomic Stream) | Ultra-low (<10ms) | HFT Execution, Queue Position | 300+ CEX | Enterprise |
| Kaiko | L1/L2 (Normalized) | Low (~50ms) | Risk Management, SOR | 100+ CEX/DEX | Professional |
| CoinGecko | Aggregated | High (minutes) | Universe Selection, Discovery | 1000+ CEX/DEX | Free/Pro |

Stack Architecture Recommendation:

- Hot Path (Execution): CoinAPI L3 → Your HFT Bot

- Warm Path (Risk/Analytics): Kaiko L2 → Portfolio Valuation

- Cold Path (Discovery): CoinGecko → Watchlist Updates

1.2 Latency Architectures and Timestamp Transparency

In the "Hot Path," milliseconds define the difference between a profitable arbitrage and a "toxic" fill. The architecture of the data provider determines the "Time-to-Wire"—the elapsed time between an event occurring on the exchange and the data packet leaving the provider's server.

CoinAPI's Direct Stream Architecture:

CoinAPI utilizes WebSocket DS (Direct Stream) and Exchange Link technologies to minimize internal hops. A critical feature for quants is the transparency of Dual Timestamps. The API provides both:

- time_exchange: The timestamp generated by the exchange's matching engine.

- time_coinapi: The timestamp when CoinAPI received the message.

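The delta between these two timestamps lets the developer measure propagation delay explicitly and track how it varies over time ("Network Jitter"). Because the two clocks belong to different hosts, the delta also absorbs any clock offset between them. It is therefore most reliable as a trend rather than as an exact figure. If the delta exceeds a certain threshold (e.g., 50ms), the bot can be programmed to treat the data as "stale" and widen its quoting spreads to protect against adverse selection.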
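Here is a minimal sketch of that staleness check. It assumes the two fields arrive as ISO 8601 UTC strings with up to seven fractional digits; check the exact format against CoinAPI's documentation. The 50 ms threshold comes from the text. The 2x widening factor and the helper names are hypothetical.

```python
from datetime import datetime, timezone


def parse_iso_utc(ts):
    """Parse an ISO 8601 UTC string such as '2024-05-01T12:00:00.1234567Z'.

    Fractional seconds are truncated or padded to microseconds, since
    datetime stores at most six digits.
    """
    ts = ts.rstrip("Z")
    if "." in ts:
        base, frac = ts.split(".", 1)
        frac = (frac + "000000")[:6]
    else:
        base, frac = ts, "000000"
    dt = datetime.strptime(base, "%Y-%m-%dT%H:%M:%S")
    return dt.replace(microsecond=int(frac), tzinfo=timezone.utc)


def propagation_ms(msg):
    """time_coinapi - time_exchange in milliseconds (includes any clock offset)."""
    t_exchange = parse_iso_utc(msg["time_exchange"])
    t_coinapi = parse_iso_utc(msg["time_coinapi"])
    return (t_coinapi - t_exchange).total_seconds() * 1000.0


def quoted_half_spread(base_half_spread, delay_ms, stale_after_ms=50.0, widen_factor=2.0):
    """Widen quotes when the feed looks stale; otherwise quote normally."""
    if delay_ms > stale_after_ms:
        return base_half_spread * widen_factor
    return base_half_spread
```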

Kaiko's Snapshot-Based Streams:

Kaiko’s streaming infrastructure is built on a "Snapshot + Delta" model. When a client subscribes, they receive a full order book snapshot, followed by incremental updates. While efficient for bandwidth, this introduces a vulnerability: if a packet is dropped, the client state becomes corrupted, necessitating a new snapshot request. During periods of extreme volatility, this request-response cycle can induce latency spikes exactly when speed is most critical. Furthermore, Kaiko enforces stricter rate limits on its standard streaming tiers (e.g., 3,000 subscriptions per minute), which can bottleneck strategies attempting to monitor thousands of pairs simultaneously.
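The usual defense is sequence checking. Apply a delta only if it directly follows the last one applied; otherwise, discard local state and request a fresh snapshot. This is a minimal sketch that assumes each message carries a monotonically increasing `seq` field and that a size of 0 deletes a price level. These field names are hypothetical, and Kaiko's actual message schema may differ.

```python
class LocalBook:
    """Order book rebuilt from one snapshot plus sequenced deltas."""

    def __init__(self):
        self.bids = {}  # price -> size
        self.asks = {}
        self.last_seq = None  # None means "no trustworthy state; need a snapshot"

    def apply_snapshot(self, snap):
        self.bids = {price: size for price, size in snap["bids"]}
        self.asks = {price: size for price, size in snap["asks"]}
        self.last_seq = snap["seq"]

    def apply_delta(self, delta):
        """Apply one delta. Returns False when a resync (new snapshot) is needed."""
        if self.last_seq is None:
            return False
        if delta["seq"] <= self.last_seq:
            return True  # duplicate or already-applied message: ignore it
        if delta["seq"] != self.last_seq + 1:
            # Gap detected: a message was dropped, so local state is invalid.
            self.last_seq = None
            return False
        side = self.bids if delta["side"] == "bid" else self.asks
        if delta["size"] == 0:
            side.pop(delta["price"], None)
        else:
            side[delta["price"]] = delta["size"]
        self.last_seq = delta["seq"]
        return True
```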

1.3 Historical Data: The Backtesting Paradox

A fundamental requirement for the 2026 stack is the unification of the Backtesting and Live Trading pipelines. A common failure mode in algo-trading is a gap between backtested and live performance, which shows up as a higher "Implementation Shortfall" than the backtest predicted. It is often caused by discrepancies between the data used to train a model and the data encountered in production.

CoinAPI's Flat Files:

CoinAPI solves this by offering Flat Files (CSV/Parquet via S3) that mirror their real-time schema exactly. This allows developers to use the same parsing logic for both historical simulation and live execution. Moreover, their L3 Replay capability allows a developer to stream historical data over a WebSocket as if it were live. This is a powerful feature for "Infrastructure Backtesting": validating not just the trading strategy, but the resilience of the networking code and message handlers under high-load conditions.
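Reusing one handler for both modes can be sketched with asyncio. This assumes historical events have already been loaded (for example, from a flat file) into dicts sorted by a `ts` field in epoch seconds. `ts`, `replay`, and its `speed` parameter are hypothetical names, not CoinAPI's replay API.

```python
import asyncio
import time


async def replay(events, handler, speed=1.0):
    """Feed historical events to `handler`, preserving their original spacing.

    events: iterable of dicts sorted by 'ts' (epoch seconds; hypothetical field).
    handler: the same async callable the live WebSocket loop uses.
    speed: 1.0 = real time, 10.0 = ten times faster, 0 = as fast as possible.
    """
    start_wall = time.monotonic()
    first_ts = None
    for event in events:
        if first_ts is None:
            first_ts = event["ts"]
        if speed > 0:
            # Wait until this event's scheduled offset from the start of the replay.
            due = (event["ts"] - first_ts) / speed
            delay = due - (time.monotonic() - start_wall)
            if delay > 0:
                await asyncio.sleep(delay)
        await handler(event)
```

Usage: `asyncio.run(replay(history, on_message, speed=10.0))`, where `on_message` is the coroutine already registered with the live feed. Running at `speed=0` turns the same code into a throughput stress test for the handlers.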

Kaiko's Analytical Archives:

Kaiko provides deep historical archives of trade and aggregated quote data, which are excellent for generating "Fair Value" curves or researching liquidity trends over long horizons. However, their focus is on providing "clean" history rather than the raw, replayable L3 event stream required to simulate HFT microstructure.

1.4 Synthesis: The Hybrid Data Strategy

The optimal "2026 Stack" does not rely on a single provider. It employs a Hierarchical Data Architecture:

- Hot Path (Execution Trigger): CoinAPI. Subscribed via WebSocket DS to the specific subset of assets currently being traded. Provides the atomic L3 truth needed for immediate decision-making.

- Warm Path (Risk & Context): Kaiko. Used to calculate portfolio-wide metrics, volatility indices, and liquidity scores. Its "smoothed" nature is a feature here, preventing outlier ticks from triggering false risk alarms.

- Cold Path (Discovery): CoinGecko. Polled periodically to scan the broader market and update the "Universe" of tradable assets.

---

Part II: The Venue – Execution Mechanics and API Engineering

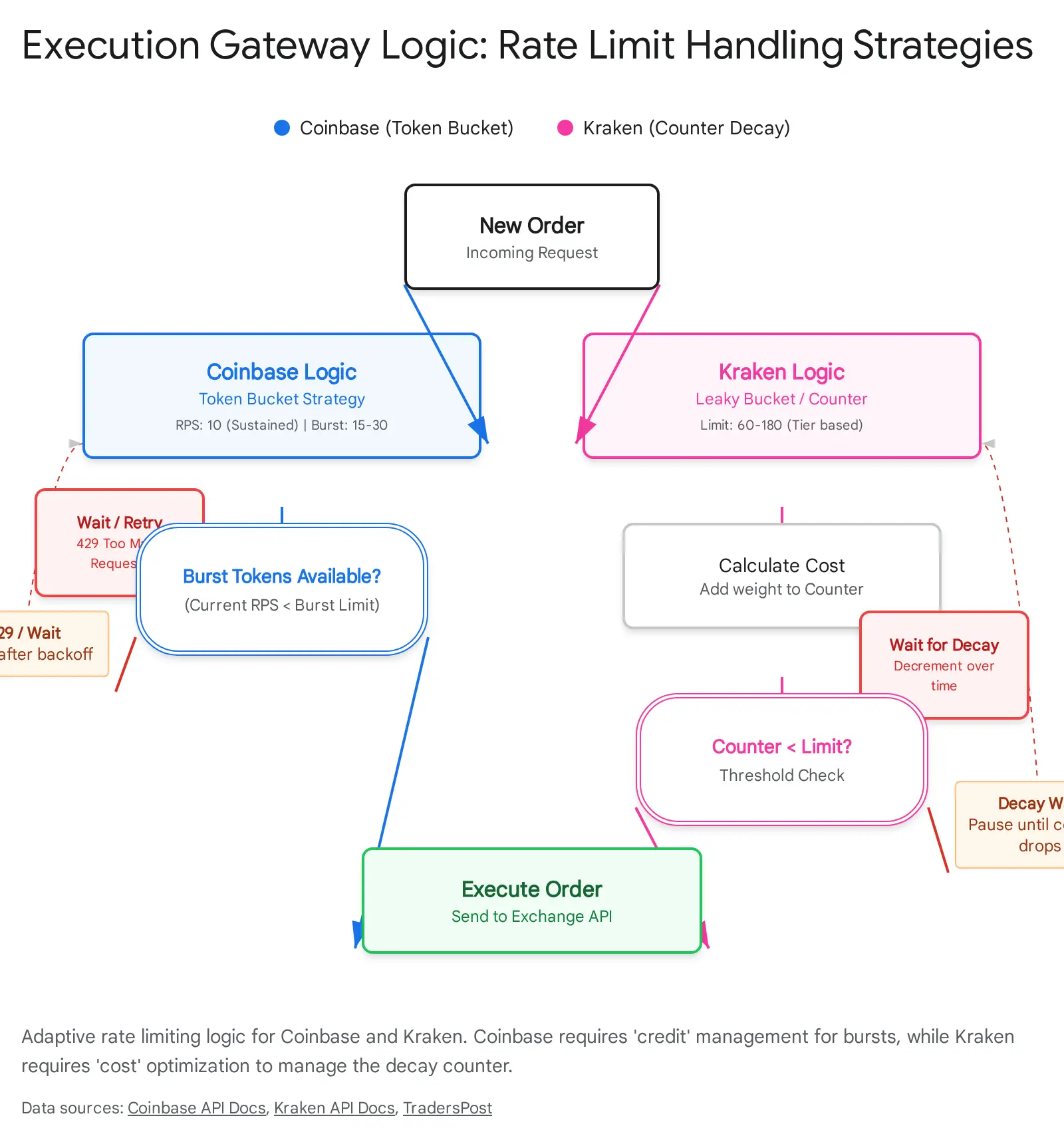

Once the signal is generated, it must be translated into an order. In the fragmented crypto landscape, the exchange is not just a destination; it is an adversary. Rate limits, matching engine quirks, and API downtimes are the environmental hazards a bot must navigate. We examine the two primary centralized gateways for the 2026 stack: Coinbase (Advanced Trade) and Kraken.

2.1 Coinbase Advanced Trade API: The "Burst" Philosophy

Affiliate link. See our methodology.

Coinbase has deprecated its legacy Pro API in favor of the Advanced Trade API, a unified platform for spot and derivatives. Its rate-limiting architecture is distinct and dictates a specific style of trading.

Rate Limit Architecture: The Token Bucket

Coinbase employs a Requests Per Second (RPS) model with a specific "Burst" allowance:

- REST API: Standard limit of 10 RPS, with bursts up to 15 RPS.

- Private Endpoints (Order Entry): 15 RPS, with bursts up to 30 RPS.

- WebSocket: Public feeds are capped at 750 connections/sec/IP, but authenticated messages (order placement via socket) are limited to 8 messages/sec.

Strategic Implication: The "Burst" capacity is a double-edged sword. It allows a bot to "sprint"—firing a rapid ladder of orders to sweep a book or capture a breakout. However, once the burst tokens are depleted, the API returns a hard HTTP 429 Too Many Requests error. This necessitates a Token Bucket implementation in the bot's code: the system must track its own consumption and "save" tokens for volatility spikes, rather than running at a constant maximum velocity.
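Here is a minimal client-side token bucket. It uses the private-endpoint figures quoted above (15 RPS sustained, bursts to 30) as defaults. The `reserve` parameter is a hypothetical way to implement "saving" tokens: routine requests may not dip below it, while urgent ones may. The injectable clock only makes the behavior testable. Verify the current limits against Coinbase's documentation before relying on these numbers.

```python
import time


class TokenBucket:
    """Refills `rate` tokens per second up to `capacity`; one token per request."""

    def __init__(self, rate=15.0, capacity=30.0, reserve=10.0, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.reserve = reserve  # tokens held back for urgent orders
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def _refill(self):
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now

    def try_acquire(self, urgent=False):
        """Spend one token if allowed; routine traffic never drains the reserve."""
        self._refill()
        floor = 0.0 if urgent else self.reserve
        if self.tokens - 1.0 >= floor:
            self.tokens -= 1.0
            return True
        return False
```

In a live bot, `try_acquire` would typically sit in a loop that briefly awaits `asyncio.sleep` until a token is available, rather than dropping the request and risking an HTTP 429.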

Developer Experience: Handling the SDK

The official coinbase-advanced-py SDK is the standard interface, but it presents specific challenges:

- Weak Typing: Responses often contain nested dictionaries that do not map cleanly to Python objects. A developer must access fields using bracket notation (e.g., account['available_balance']['value']) rather than dot notation. This breaks IDE autocompletion and static type checking, increasing the risk of runtime errors.

- Defensive Coding: To mitigate this, the 2026 stack wraps the SDK responses in Pydantic models. This adds a serialization overhead but ensures strict type validation before the data enters the trading logic.

- Rate Limit Headers: A critical feature of the SDK is the rate_limit_headers=True option. This surfaces the real-time status of the rate-limit budget (e.g., "Remaining: 14") in each API response. A robust bot reads this value after every request to dynamically adjust its throttle, creating a "Latency-Aware Rate Limiter."
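Two of these habits can be sketched with the standard library alone. A frozen dataclass stands in here for the Pydantic model the text recommends; in Pydantic v2, `model_validate` would replace the hand-written `from_dict`. The account shape is an assumed example of a nested balance response. The throttle assumes you have already read the remaining-request count and the seconds until reset from the response's rate-limit headers. The pacing rule itself is a hypothetical policy.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Balance:
    value: Decimal
    currency: str


@dataclass(frozen=True)
class Account:
    uuid: str
    currency: str
    available_balance: Balance

    @classmethod
    def from_dict(cls, raw):
        """Build a typed Account from a raw response dict.

        Raises KeyError for missing fields and decimal.InvalidOperation for
        non-numeric balances, so malformed data never reaches trading logic.
        """
        bal = raw["available_balance"]
        value = Decimal(str(bal["value"]))  # parse from string to avoid float rounding
        return cls(
            uuid=str(raw["uuid"]),
            currency=str(raw["currency"]),
            available_balance=Balance(value=value, currency=str(bal["currency"])),
        )


def throttle_delay(remaining, reset_s, floor=2):
    """Seconds to wait before the next request, from the server-reported budget.

    Spreads the requests still available (minus `floor`, kept for emergencies)
    evenly over the time left in the window; at or below `floor`, waits for reset.
    """
    if remaining <= floor:
        return reset_s
    return reset_s / (remaining - floor)
```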

2.2 Kraken API: The "Efficiency" Philosophy


Kraken has long been a favorite of serious quants due to its robust engineering and stability. With the introduction of WebSockets V2, it has further solidified its position for high-frequency market making.

Rate Limit Architecture: The Leaky Bucket

Kraken utilizes a Counter-Based system that functions like a "Leaky Bucket":

- The Cost: Every API action has a cost. Placing an order might cost 0 points (to encourage liquidity provision) or 1 point. Canceling an order might cost 1 point.

- The Counter: Each user has a counter (e.g., max 125 points for Intermediate tier).

- The Decay: The counter decreases (decays) over time at a fixed, tier-dependent rate of points per second.

- The Penalty: If the counter hits the maximum, the user is locked out until it decays.

Strategic Implication: This model heavily penalizes "spammy" behavior such as "Quote Stuffing" (rapidly placing and canceling orders). Conversely, it rewards Market Making. If a bot's orders are filled (trades executed), Kraken typically offers a reduction in the counter penalty. This incentivizes the developer to optimize the Order-to-Trade Ratio. A bot on Kraken must be precise; it cannot afford to be noisy.
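A client-side mirror of this counter lets the bot refuse an action before the exchange does. The sketch assumes a single counter with a fixed cost per action and linear decay. 125 is the Intermediate-tier maximum quoted above, but the default decay rate is a placeholder. Kraken's actual per-action costs should be taken from its documentation.

```python
import time


class DecayingCounter:
    """Rises by each action's cost and decays linearly toward zero over time."""

    def __init__(self, max_count=125.0, decay_per_s=1.0, clock=time.monotonic):
        self.max_count = max_count
        self.decay_per_s = decay_per_s  # placeholder; tier-dependent in practice
        self.clock = clock
        self.count = 0.0
        self.updated = clock()

    def _decay(self):
        now = self.clock()
        self.count = max(0.0, self.count - (now - self.updated) * self.decay_per_s)
        self.updated = now

    def spend(self, cost):
        """Record an action, refusing any that would push the counter to the maximum."""
        self._decay()
        if self.count + cost >= self.max_count:
            return False
        self.count += cost
        return True
```

Because refused actions cost nothing locally, a quoting loop can check `spend` before every cancel/replace. This naturally throttles noisy behavior and lowers the Order-to-Trade Ratio.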

WebSockets V2 and Async Python

The python-kraken-sdk (an unofficial but highly recommended library) fully supports the V2 WebSockets API:

- Normalized Payloads: V2 offers a significantly cleaner JSON structure compared to the legacy V1, reducing the CPU cycles needed for parsing.

- Async Native: The SDK exposes SpotAsyncClient. For the 2026 stack, Asynchronous I/O (asyncio) is mandatory. Synchronous code blocks the execution thread while waiting for a network response. An async architecture allows the bot to maintain hundreds of open WebSocket subscriptions and manage orders across multiple pairs concurrently on a single core.
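The single-core concurrency model can be sketched with simulated feeds so it runs offline. One task per subscription pushes messages into a shared queue, and a single consumer handles every pair on the same event loop. `fake_feed` and the queue-based fan-in are illustrative stand-ins, not the python-kraken-sdk API.

```python
import asyncio
import random


async def fake_feed(pair, n_messages, out):
    """Stand-in for one WebSocket subscription emitting ticks for `pair`."""
    for i in range(n_messages):
        await asyncio.sleep(random.uniform(0, 0.005))  # simulated network wait
        await out.put((pair, i))
    await out.put((pair, None))  # end-of-stream marker


async def consume(out, n_feeds):
    """One consumer handles all pairs; it never blocks the other feeds."""
    counts = {}
    finished = 0
    while finished < n_feeds:
        pair, msg = await out.get()
        if msg is None:
            finished += 1
            continue
        counts[pair] = counts.get(pair, 0) + 1
    return counts


async def main(pairs, n_messages=20):
    out = asyncio.Queue()
    producers = [asyncio.create_task(fake_feed(p, n_messages, out)) for p in pairs]
    counts = await consume(out, len(pairs))
    await asyncio.gather(*producers)
    return counts
```

With 100 simulated pairs, every feed's waits overlap on one thread. Total runtime stays close to that of a single feed rather than growing with the number of pairs.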

2.3 Verdict: Venue Selection by Strategy

- For Market Making & Grid Strategies: Kraken is the superior venue. The decay-based rate limit rewards the patience of resting orders, and the V2 Websocket API is optimized for the high-concurrency monitoring required to maintain a dense grid of quotes.

- For Momentum & Taker Strategies: Coinbase is preferable. The "Burst" capacity allows the bot to "pay up" for speed when an opportunity arises, executing a rapid sequence of fills to capture a fleeting price dislocation before the market adjusts.

---

Part III: The Brain – AI Integration and The Algo-Loop

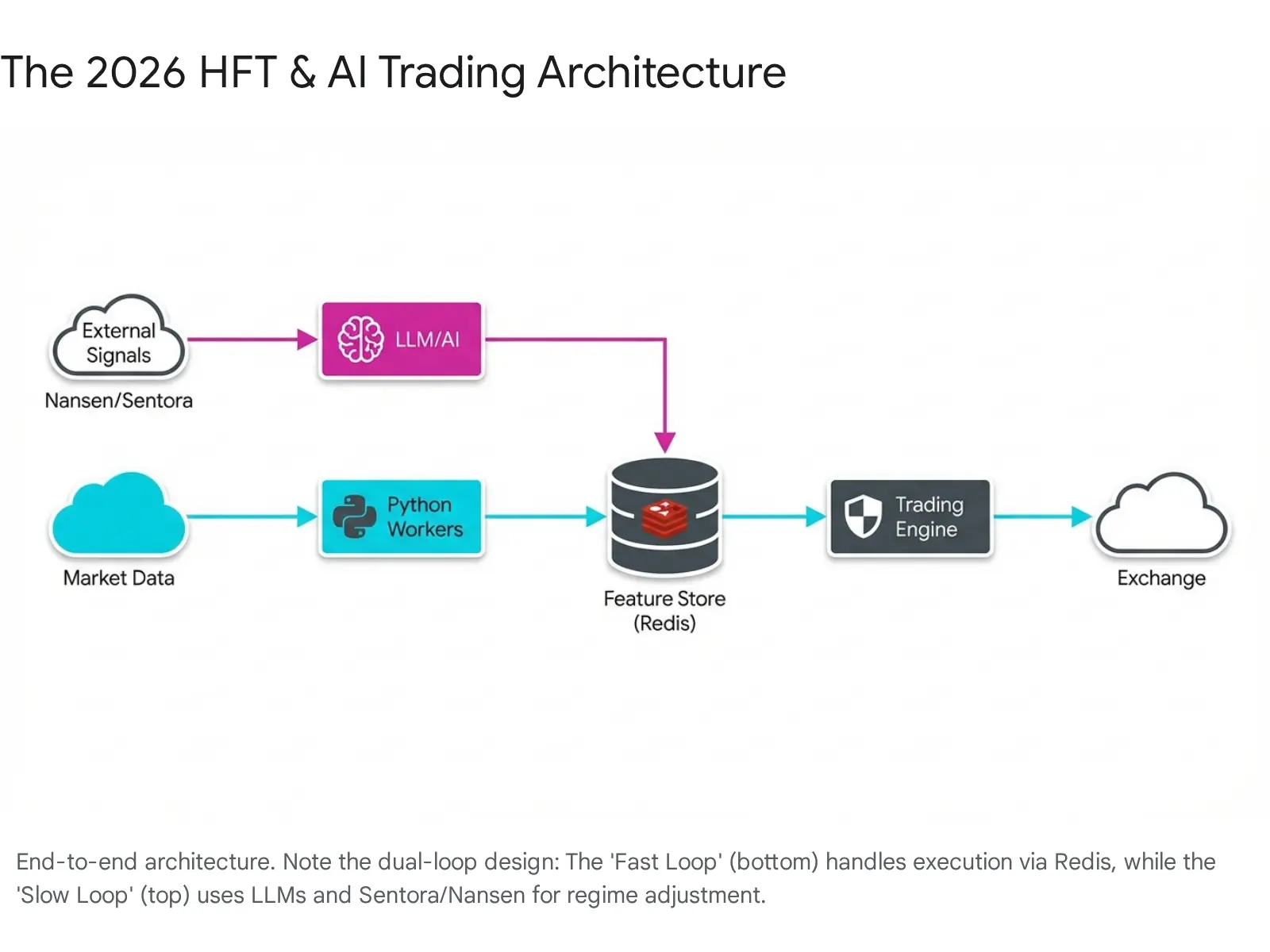

The defining characteristic of the 2026 stack is the integration of Generative AI and Machine Learning (ML). However, a naïve implementation—sending market prices to an LLM and asking for a prediction—is a recipe for failure. LLMs are slow, non-deterministic, and prone to hallucination. They cannot function in the millisecond loop of an HFT bot.

Instead, the 2026 stack uses AI for Regime Detection and Context Awareness. The AI does not pull the trigger; it decides which gun the bot should use. Is the market in a "High Volatility / News Driven" regime? Or a "Low Volatility / Mean Reverting" regime? The AI answers this question, and the HFT bot adjusts its parameters accordingly.

3.1 The Architecture: The Real-Time Feature Store

To bridge the gap between the millisecond world of HFT and the second-scale world of AI, we utilize a Feature Store Architecture.

The Feature Store (Redis + Feast):

We employ Redis as the high-performance "hot storage" layer, managed by Feast (Feature Store):

- Ingestion (The Fast Loop): Python workers consume the L3 feeds from CoinAPI. They calculate real-time features (e.g., 1-minute VWAP, Order Book Imbalance, Spread Width) and write them to Redis using Feast online stores. This happens in microseconds.

- Inference (The Slow Loop): Every 5-15 minutes, a separate AI Agent process wakes up. It reads the aggregated features from Redis, effectively "seeing" the recent market history.

- The Context Handshake: The AI Agent queries external intelligence providers (Sentora, Nansen), synthesizes a "Regime Score," and writes it back to Redis.

- Execution: The HFT bot reads this Regime Score from Redis before every trade to adjust its risk parameters (e.g., widening spreads during high risk).

This architecture decouples the latency of the AI from the latency of the trade execution.
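The fast-loop side of this handshake can be sketched in a few functions. A plain dict stands in for the Redis online store so the example runs without a server, and the key names are hypothetical. In the architecture above, these reads and writes would go through Feast and Redis instead. The features use common definitions: top-of-book order book imbalance as `(bid - ask) / (bid + ask)`, and VWAP as total notional divided by total volume.

```python
def order_book_imbalance(bid_size, ask_size):
    """Top-of-book imbalance in [-1, 1]: +1 is all bids, -1 is all asks."""
    total = bid_size + ask_size
    return 0.0 if total == 0 else (bid_size - ask_size) / total


def vwap(trades):
    """Volume-weighted average price of (price, size) trades, or None if empty."""
    volume = sum(size for _, size in trades)
    if volume == 0:
        return None
    return sum(price * size for price, size in trades) / volume


def compute_features(best_bid, best_ask, bid_size, ask_size, trades_1m):
    """Fast loop: turn the latest book state and 1-minute trades into features."""
    return {
        "spread": best_ask - best_bid,
        "obi": order_book_imbalance(bid_size, ask_size),
        "vwap_1m": vwap(trades_1m),
    }


# A plain dict stands in for the Redis online store; key names are hypothetical.
online_store = {}


def publish(symbol, features):
    for name, value in features.items():
        online_store[f"feat:{symbol}:{name}"] = value


def quote_half_spread(symbol, base_half_spread):
    """Execution: scale quotes by the latest regime multiplier (1.0 if none yet)."""
    multiplier = online_store.get(f"regime:{symbol}:risk_multiplier", 1.0)
    return base_half_spread * multiplier
```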

3.2 Intelligence Providers: Sentora & Nansen

The AI Agent relies on high-level "Concept Data" that is invisible to the price feed.

Sentora (formerly IntoTheBlock): DeFi Economic Risk

Sentora provides signals on the fundamental health of the DeFi ecosystem:

- Risk Pulse: This is a proprietary AI-driven signal that detects anomalies in DeFi protocols. For example, it can identify a massive liquidation event starting on an Aave lending pool or a de-pegging event in a Curve stablecoin pool.

- Cross-Venue Alpha: A centralized exchange (CEX) bot can use this data to "front-run" the contagion. If Sentora detects a liquidation cascade on-chain (e.g., large amounts of ETH collateral being liquidated in DeFi), the bot can anticipate that this selling pressure is likely to spill over to Coinbase and Kraken. The AI Agent sees the "High Risk" flag from Sentora and instructs the HFT bot to switch to a "Short Bias" or "Risk Off" mode before the price drop hits the CEX.

Nansen: Smart Money & Token God Mode

Nansen provides "Entity Labeling," allowing the bot to track who is moving money:

- Token God Mode (TGM): This endpoint provides a view of token flows broken down by entity type (e.g., Exchanges, VCs, Market Makers).

- AI Integration via MCP: Nansen utilizes the Model Context Protocol (MCP) to allow AI Agents to query its data in natural language. The AI Agent can execute a prompt: "Query Nansen for all tokens with a net inflow of >$2M from 'Smart Money' labels in the last hour." The output serves as a dynamic "Whitelist." The AI Agent updates the Redis Feature Store with this list, and the HFT bot automatically subscribes to these new, hot tokens via CoinAPI.

3.3 Prompt Engineering for Regime Detection

The role of the LLM is to synthesize these heterogeneous signals into a single "Market Regime."

Sample Prompt Logic:

"You are a Senior Risk Manager.

INPUTS:

- Sentora DeFi Risk Score: {sentora_score} (Scale 0-100)

- Nansen Smart Money Flow: {nansen_flow} (Net USD)

- Technical Volatility (from Redis): {volatility_index}

TASK: Determine the current Market Regime.

OPTIONS:

OUTPUT: JSON object with 'regime' and 'risk_multiplier'."

This structured output is parsed by the Python sidecar and injected into the execution loop.
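LLM output is not guaranteed to be valid JSON, so the sidecar should treat it as untrusted input. The sketch below fills the prompt template and validates the reply, falling back to a conservative default on any problem. The source leaves the regime options unspecified, so `ALLOWED_REGIMES`, the multiplier bounds, and `SAFE_DEFAULT` are hypothetical placeholders. The call to the model itself is omitted.

```python
import json

PROMPT_TEMPLATE = (
    "You are a Senior Risk Manager.\n"
    "INPUTS:\n"
    "- Sentora DeFi Risk Score: {sentora_score} (Scale 0-100)\n"
    "- Nansen Smart Money Flow: {nansen_flow} (Net USD)\n"
    "- Technical Volatility (from Redis): {volatility_index}\n"
    "TASK: Determine the current Market Regime.\n"
    "OUTPUT: JSON object with 'regime' and 'risk_multiplier'."
)

# Hypothetical placeholders: the source does not list the regime options.
ALLOWED_REGIMES = {"risk_on", "neutral", "risk_off"}
SAFE_DEFAULT = {"regime": "risk_off", "risk_multiplier": 2.0}


def build_prompt(sentora_score, nansen_flow, volatility_index):
    return PROMPT_TEMPLATE.format(
        sentora_score=sentora_score,
        nansen_flow=nansen_flow,
        volatility_index=volatility_index,
    )


def parse_regime(raw_reply, min_mult=0.5, max_mult=5.0):
    """Treat the LLM reply as untrusted: validate it or fall back to SAFE_DEFAULT."""
    try:
        data = json.loads(raw_reply)
        regime = data["regime"]
        multiplier = float(data["risk_multiplier"])
    except (ValueError, KeyError, TypeError):
        return dict(SAFE_DEFAULT)
    if not isinstance(regime, str) or regime not in ALLOWED_REGIMES:
        return dict(SAFE_DEFAULT)
    if not min_mult <= multiplier <= max_mult:  # also rejects NaN
        return dict(SAFE_DEFAULT)
    return {"regime": regime, "risk_multiplier": multiplier}
```

Defaulting to the cautious regime means a malformed reply widens spreads instead of silently leaving the bot in an aggressive mode.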

---

Part IV: The Shield – Security Architecture

In the adversarial environment of crypto, security is not a feature; it is an existential requirement. A bot that generates 100% alpha but loses its keys is a failed project.

4.1 The Principle of Least Privilege: Read-Only Keys

The 2026 stack enforces a strict segregation of duties via API keys:

- The Auditor Key (Read-Only): This key is used by the "Slow Loop" AI Agent and the Ingestion workers. It has permissions to query:ledger, query:open_orders, and query:trades. It strictly has NO trade or withdrawal permissions. If the AI server is compromised (a higher risk due to external API calls), the attacker gains nothing but information.

- The Executor Key (Trade-Only): This key is used only by the HFT bot in the "Fast Loop." It has permissions for orders:create and orders:cancel.

- The Withdrawal Key: This key does not exist on the server. Withdrawal permissions should never be enabled for an algorithmic trading key. Manual transfers should require a separate, offline-generated key or hardware wallet interaction.
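One way to enforce this segregation is a fail-fast check at startup that compares each key's granted permissions with its role. The role table below reuses the permission names from the text. The `withdraw` prefix is an assumption to adapt per venue. So is the source of the granted scopes: some exchanges expose an endpoint that reports a key's permissions.

```python
# Hypothetical role -> permission table mirroring the key roles described above.
ALLOWED_SCOPES = {
    "auditor": {"query:ledger", "query:open_orders", "query:trades"},
    "executor": {"orders:create", "orders:cancel"},
}
FORBIDDEN_PREFIXES = ("withdraw",)


def check_key(role, granted_scopes):
    """Raise PermissionError if a key carries more permissions than its role needs."""
    if role not in ALLOWED_SCOPES:
        raise ValueError(f"unknown role: {role}")
    granted = set(granted_scopes)
    forbidden = sorted(s for s in granted if s.startswith(FORBIDDEN_PREFIXES))
    if forbidden:
        raise PermissionError(f"{role} key must never hold {forbidden}")
    extra = sorted(granted - ALLOWED_SCOPES[role])
    if extra:
        raise PermissionError(f"{role} key has unneeded scopes {extra}")
```

Running `check_key` for every configured key before the event loop starts makes an over-privileged key crash the deployment instead of sitting there as a silent risk.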

4.2 Network Topology: IP Whitelisting & NAT Gateways

Both Coinbase and Kraken allow users to whitelist specific IP addresses for API usage. This is the strongest defense against key theft.

The Cloud Challenge:

Modern bots run on cloud functions (AWS Lambda) or auto-scaling container services (ECS/Kubernetes). These services typically use dynamic IPs, so the bot's public IP address can change whenever a task restarts or scales, breaking the whitelist.

The Architecture Solution:

- Private Subnet: Deploy the trading bot containers inside a private subnet of an AWS Virtual Private Cloud (VPC).

- NAT Gateway: Route all outbound traffic from this subnet through a NAT Gateway.

- Elastic IP (EIP): Assign a static Elastic IP to the NAT Gateway.

- Whitelist: Add only this single Elastic IP to the Coinbase and Kraken API settings.

This ensures that even if an API key is leaked to the public internet, it cannot be used because the request would originate from an unauthorized IP address.

Conclusion

The "2026 Algo-Trading Stack" represents the maturity of the crypto trading discipline. It rejects the simplicity of the past in favor of robust engineering.

- It wins the Data Battle by leveraging CoinAPI for atomic L3 truth while using Kaiko and CoinGecko for context.

- It masters Execution by adapting to the specific rate-limit philosophies of Coinbase (Burst) and Kraken (Decay).

- It achieves Intelligence not by asking AI to predict price, but by using Redis and Feast to feed Sentora and Nansen signals into a regime-aware logic loop.

- It survives via a Zero Trust security model rooted in IP whitelisting and key segregation.

For the Quant Developer, this stack is the baseline. The alpha comes from what you build on top of it.

Frequently Asked Questions


Do I need L3 data for my trading bot?

No. L3 data (via CoinAPI) is only necessary for high-frequency strategies that depend on queue position estimation or microsecond-level arbitrage. For swing trading, rebalancing bots, or DCA strategies, L2 data (via Kaiko) or even aggregated pricing (CoinGecko) is sufficient. The key is matching your data fidelity to your strategy's time horizon.

Should I start with Coinbase or Kraken?

Coinbase is more beginner-friendly due to better documentation and the official coinbase-advanced-py SDK. However, Kraken offers superior rate limits for market-making strategies and has a longer track record of uptime. Start with Coinbase for simplicity, graduate to Kraken for performance.

Can I use CoinGecko data to trigger trades?

Not for execution. CoinGecko's latency (minutes) makes it unsuitable for triggering trades. Use it exclusively for universe selection (discovering new tokens gaining volume). For actual trade execution, you need real-time L2 or L3 data from CoinAPI or Kaiko.

How much does professional market data cost?

Expect $500-5,000/month for professional-tier L2 data (Kaiko) and $5,000-50,000/month for enterprise L3 data (CoinAPI). Start with free/low-cost aggregated data to validate your strategy, then upgrade to paid feeds only when your backtests justify the expense.

What is the difference between Token Bucket and Leaky Bucket rate limiting?

Token Bucket (Coinbase): Allows bursts of activity; you can 'save up' unused capacity and spend it rapidly when needed. Ideal for momentum strategies that need to fire multiple orders quickly. Leaky Bucket (Kraken): Penalizes bursts; your rate limit counter decays slowly over time. Rewards patient, steady order placement. Ideal for market-making where you maintain a grid of resting orders.

Do I need to train my own AI models?

Not necessarily. The 2026 stack uses AI for regime detection, not price prediction. You can integrate pre-built signals from Sentora (DeFi risk scores) and Nansen (smart money flows) without training your own models. Focus on system architecture first, add custom ML models later once you have profitable baseline strategies.

How should I secure my API keys?

Follow these principles: (1) Read-Only Keys for data feeds (never give data providers withdrawal permissions), (2) Trading-Only Keys for exchanges (disable withdrawal via API settings), (3) IP Whitelisting (restrict API access to your VPS IP only), (4) Key Rotation (change keys every 30-90 days), (5) Multi-Key Architecture (separate keys for data, execution, and monitoring).

Can I run an HFT bot from my home internet connection?

No. HFT requires co-location or at minimum a dedicated VPS in the same AWS region as the exchange (e.g., us-east-1 for Coinbase). Home internet latency (50-200ms) will cause you to consistently lose to co-located competitors. Budget $50-500/month for proper infrastructure.

Which programming language should I use?

Python dominates rapid prototyping and strategy research (pandas, numpy, scikit-learn ecosystem). For production HFT where every millisecond counts, consider Rust or C++. A common split is Python for the 'brain' (AI/regime detection) and Rust for the 'execution engine' (order routing).

How do I backtest with L3 data?

L3 backtesting requires specialized infrastructure: (1) Store historical L3 streams in compressed Parquet format (~10TB for 1 year of BTC data), (2) Use replay libraries (like tardis-dev for crypto), (3) Simulate order book reconstruction and queue position, (4) Account for realistic fill assumptions (you don't get filled at exact BBO). Most beginners should start with L2 data and simple backtests before investing in L3 infrastructure.

Disclosure: This article contains affiliate links. We may earn a commission if you sign up through our partner links, but this doesn't influence our technical analysis. All platforms are evaluated independently following our methodology. Algorithmic trading carries significant risk including total capital loss. Past performance does not indicate future results. Never invest more than you can afford to lose. This is educational content, not financial advice. Always do your own research and consult a qualified financial advisor before making investment decisions.