1. Executive Summary: The Convergence of Algorithmic Execution and Probabilistic Intelligence

The global financial landscape is witnessing a structural paradigm shift, nowhere more visible than in the nascent yet hyper-accelerated domain of cryptocurrency markets. We have moved decisively beyond the era of manual "click-trading" and simplistic heuristic scripts. The contemporary market environment—characterized by fragmented liquidity across centralized and decentralized venues, 24/7 uptime, and volatility regimes that defy traditional asset modeling—demands a sophisticated synthesis of high-frequency data engineering, probabilistic machine learning, and robust, low-latency execution infrastructure.

This report serves as an exhaustive technical manual and research analysis on the construction, deployment, and management of AI-driven crypto trading systems. Unlike traditional equity markets, where automation has long been the province of institutional high-frequency trading (HFT) firms, the crypto market offers a unique democratization of algorithmic alpha. Tools that were once proprietary are now accessible via open-source frameworks; data that cost millions is available via public APIs; and execution capabilities are exposed directly to retail endpoints. However, this accessibility breeds competition. The edge in 2026 lies not in access, but in architecture.

2. Theoretical Foundations of Algorithmic Trading

Before examining the software architecture, one must understand the theoretical underpinnings that justify the use of Artificial Intelligence in financial markets. The fundamental hypothesis driving AI adoption is the rejection of the Strong Form Efficient Market Hypothesis (EMH) in the context of cryptocurrencies. While EMH suggests that asset prices reflect all available information, rendering alpha generation impossible, empirical evidence in crypto markets suggests significant inefficiencies. These arise from:

- Information Asymmetry: News propagates across the fragmented crypto ecosystem at varying speeds.

- Behavioral Biases: The retail-heavy nature of crypto exacerbates patterns driven by Fear of Missing Out (FOMO) and panic selling, creating predictable volatility clusters.

- Market Microstructure Friction: Differences in liquidity depth and latency between exchanges create transient arbitrage opportunities.

Traditional algorithmic trading relies on deterministic logic: "If the 50-day Moving Average crosses the 200-day Moving Average, buy." This approach, while easy to implement, is brittle. It assumes that historical correlations are static. AI-driven trading, conversely, is probabilistic. It does not seek a hard rule but rather estimates the conditional probability of price movement given a high-dimensional state space. An AI agent might learn that the Moving Average crossover is a valid signal only when on-chain whale inflows are low and social sentiment is neutral. This ability to capture non-linear interactions between disparate data points is the primary advantage of the machine learning approach.

2.1 The Shift from Heuristic to Stochastic Models

The evolution of trading bots can be categorized into three generations:

- Generation 1 (Heuristic): Hard-coded rules based on technical indicators (RSI, MACD). These are prone to "curve-fitting" where parameters are over-optimized for past data but fail in live markets.

- Generation 2 (Statistical/Quantitative): Models based on cointegration (pairs trading) and statistical arbitrage. These are robust but often require specific market conditions (e.g., high correlation between assets) to function.

- Generation 3 (AI/Agentic): Systems using Neural Networks and Reinforcement Learning to discover features and strategies autonomously. These systems are characterized by their ability to "generalize"—applying learned logic to unseen data.

3. Foundational System Architecture: The Anatomy of an AI Trading Agent

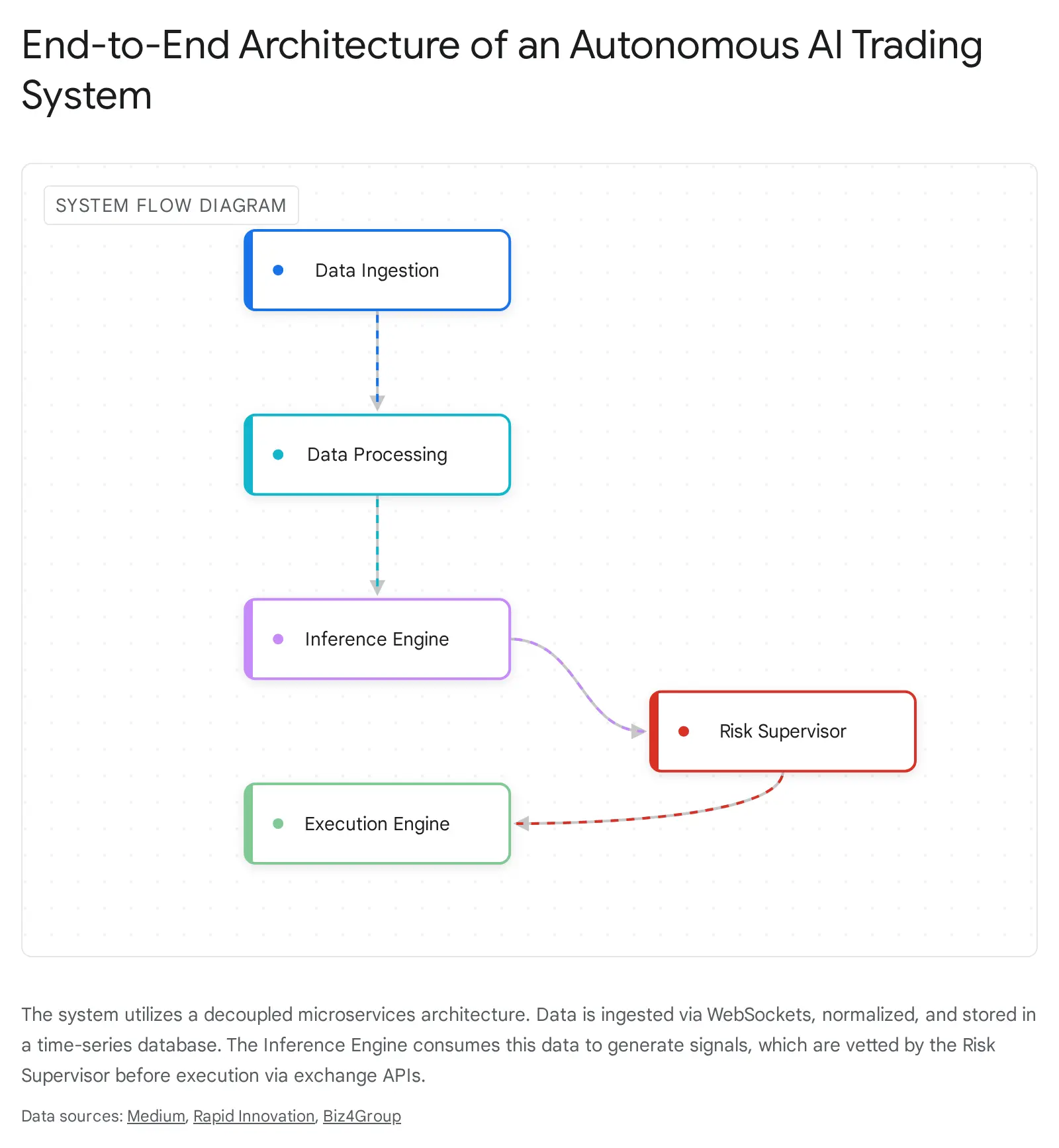

A robust AI trading bot is not a monolithic script but a distributed system composed of distinct, decoupled microservices. The "monolithic" approach—where data fetching, decision logic, and execution happen in a single loop—is a recipe for latency and failure. Professional architecture decouples these processes to ensure fault tolerance, scalability, and minimal latency. We categorize the architecture into four primary layers: the Data Ingestion Layer, the Strategy & Inference Engine, the Execution Layer, and the Risk Management Supervisor.

graph TD
    A[Data Ingestion Layer] -->|Normalized Stream| B(Pub/Sub Message Queue)
    B --> C[Strategy Engine / Inference]
    B --> D[Risk Supervisor]
    C -->|Signal| D
    D -->|Validated Order| E[Execution Layer]
    E -->|REST/FIX| F[Exchange API]

3.1 The Data Ingestion Layer

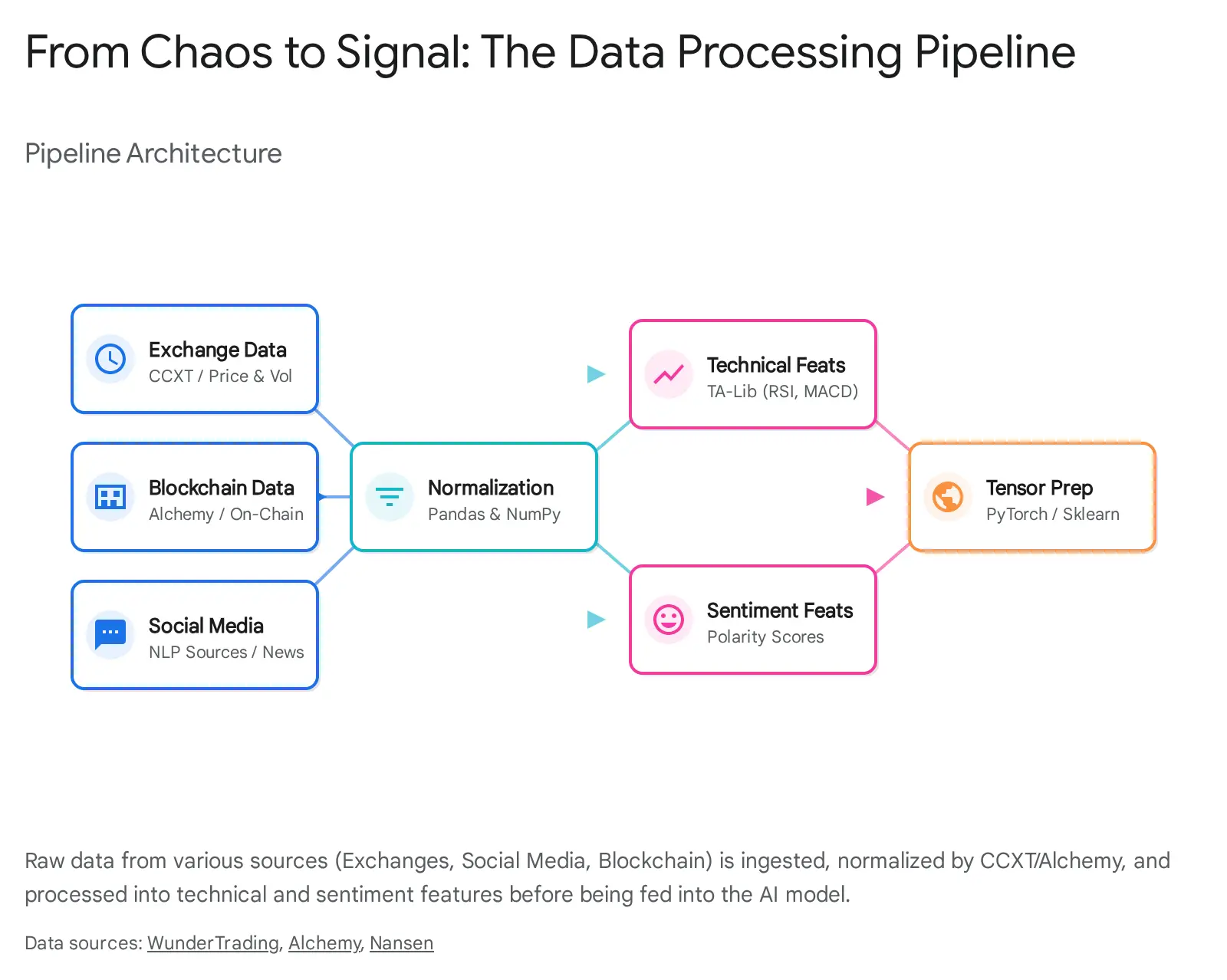

The lifeblood of any AI system is data. In crypto markets, this data is heterogeneous, arriving at varying frequencies and in differing formats. The Ingestion Layer is responsible for normalizing this chaos into a coherent stream.

- Market Data: This includes trade ticks (individual transactions), Order Book updates (Level 2 and Level 3 data), and OHLCV (Open, High, Low, Close, Volume) aggregates. High-frequency strategies require tick-level data to model market impact, while trend-following strategies may only need 1-hour candles.

- On-Chain Data: This provides a view into the "supply chain" of the asset. Metrics include transaction volumes, "whale" wallet movements (large holders moving funds to exchanges, often a bearish signal), and gas prices. Tools like Alchemy or Infura are essential gateways here.

- Alternative Data: This uncovers the "psychological" state of the market. It encompasses social sentiment (Twitter/X, Reddit), news headlines, and even developer activity on GitHub repositories.

The ingestion layer must handle WebSockets for real-time streaming (e.g., Binance wss://stream.binance.com:9443) and REST APIs for historical backfilling and account snapshots. A critical design pattern here is the Publisher-Subscriber (Pub/Sub) model. The ingestion service connects to exchanges, normalizes the data into a unified format (handling exchange-specific idiosyncrasies via libraries like CCXT), and publishes it to a message queue (e.g., Redis, Kafka) or a time-series database (e.g., InfluxDB, TimescaleDB). This decoupling ensures that a temporary lag in the strategy engine does not block data fetching, which prevents data gaps during high-volatility events, exactly when the data is most critical.
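
The decoupling described above can be sketched in-process with `asyncio.Queue`. This is only an illustration of the pattern, not a replacement for Redis or Kafka; the `Bus` class and the message fields are hypothetical names chosen for this example.

```python
import asyncio


class Bus:
    """Tiny in-process pub/sub: every subscriber owns a bounded queue."""

    def __init__(self, maxsize: int = 1000):
        self._queues = []
        self._maxsize = maxsize

    def subscribe(self) -> asyncio.Queue:
        q = asyncio.Queue(maxsize=self._maxsize)
        self._queues.append(q)
        return q

    def publish(self, msg: dict) -> None:
        # Never block the ingestion side: if a consumer lags, drop its
        # oldest message so it always sees the freshest market state.
        for q in self._queues:
            if q.full():
                q.get_nowait()
            q.put_nowait(msg)


async def demo() -> list:
    bus = Bus(maxsize=2)
    strategy_q = bus.subscribe()   # consumed by the strategy engine
    bus.subscribe()                # a second, independent consumer (risk)
    for i in range(3):
        bus.publish({"symbol": "BTC/USDT", "last": 100.0 + i})
    received = []
    while not strategy_q.empty():
        received.append(await strategy_q.get())
    return received
```

Dropping the oldest message is one possible back-pressure policy; a strategy that needs every tick (for example, to rebuild an order book) would instead need a durable queue and a resynchronization path.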

3.2 The Strategy & Inference Engine

This is the "brain" of the bot. It subscribes to the normalized data streams and processes them through feature engineering pipelines.

- Feature Engineering: Raw prices are rarely useful to a neural network because they are non-stationary (the statistical properties change over time). The engine transforms raw data into stationary features. This involves calculating technical indicators (RSI, MACD, Bollinger Bands) using optimized libraries like TA-Lib, as well as normalizing data (e.g., log-returns, z-scores) to ensure model convergence.

- Model Inference: The pre-processed features are fed into trained models (e.g., an LSTM for price prediction or a BERT model for sentiment analysis). The output is typically a probability distribution over potential actions (Buy, Sell, Hold) or a predicted scalar value (next price). In advanced systems, this inference happens asynchronously to prevent blocking the execution loop.
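
As a minimal sketch of the stationarity transforms mentioned above, the following computes log-returns and a rolling z-score using only the standard library. The function names are illustrative, and a production pipeline would more likely use NumPy or pandas.

```python
import math
from statistics import mean, pstdev


def log_returns(prices: list) -> list:
    """r_t = ln(P_t / P_{t-1}); one element shorter than the input."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]


def rolling_zscore(values: list, window: int) -> list:
    """Z-score of each value against the `window` values that precede it."""
    out = []
    for i in range(window, len(values)):
        hist = values[i - window:i]      # strictly past data: no look-ahead
        sd = pstdev(hist)
        out.append(0.0 if sd == 0 else (values[i] - mean(hist)) / sd)
    return out
```

Computing the statistics from past values only matters here: normalizing with the mean and standard deviation of the full dataset would leak future information into the training features.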

3.3 The Execution Layer

Once a signal is generated, it must be translated into an order. The execution layer is the interface with the external world and is responsible for the mechanics of the trade.

- Order Routing: Selecting the appropriate exchange or liquidity pool. In a fragmented market, the same asset may trade at slightly different prices on Binance vs. Kraken. Smart Order Routers (SOR) scan for the best execution venue.

- Order Slicing: Breaking large orders into smaller "child" orders to minimize slippage (exchange-native Iceberg orders apply the same idea by displaying only part of the order). If a bot tries to buy 100 BTC at once, it will chew through the order book and drive the price up against itself. Slicing disguises the intent.

- Idempotency: Ensuring that a network retry does not result in a duplicate order. This is a critical safety feature. If the bot sends a "Buy" request and doesn't receive a confirmation due to a timeout, it might retry. Without idempotency tokens (unique IDs for each order), the exchange might execute the trade twice.

- API Management: Handling nonces, signing requests with HMAC SHA256, and managing rate limits to avoid IP bans is the bread and butter of this layer.
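
The idempotency and signing points above might look like the following in outline. This is a sketch under assumptions: `send` is a hypothetical transport callable, the parameter names are illustrative, and each exchange defines its own exact signing payload, so the exchange documentation is authoritative.

```python
import hashlib
import hmac
import uuid
from urllib.parse import urlencode


def sign(params: dict, secret: str) -> str:
    """Hex HMAC-SHA256 over the URL-encoded parameters."""
    query = urlencode(params)
    return hmac.new(secret.encode(), query.encode(), hashlib.sha256).hexdigest()


def new_order(symbol: str, side: str, qty: float) -> dict:
    # The client order ID is generated once, before the first attempt,
    # and reused unchanged on every retry.
    return {"client_order_id": uuid.uuid4().hex,
            "symbol": symbol, "side": side, "qty": qty}


class IdempotentSubmitter:
    """Local guard against double submission of the same order."""

    def __init__(self, send):
        self._send = send          # hypothetical: send(order) -> dict
        self._results = {}

    def submit(self, order: dict) -> dict:
        cid = order["client_order_id"]
        if cid in self._results:   # already confirmed: do not resend
            return self._results[cid]
        result = self._send(order) # may raise on timeout; nothing is cached then
        self._results[cid] = result
        return result
```

The local cache only covers confirmed orders. After a timeout the outcome is unknown, so the retry relies on the exchange rejecting a repeated client order ID, which many exchanges support.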


3.4 The Risk Management Supervisor

This layer acts as a "circuit breaker" or "governor" that sits logically between the Strategy Engine and the Execution Layer. It validates every trade against pre-defined safety rules irrespective of the AI's confidence. The Risk Supervisor has veto power.

- Portfolio Checks: "Do we have enough USDT?" "Is the portfolio exposure to BTC already at the maximum defined limit (e.g., 20%)?"

- Market Conditions: "Is the spread too wide?" "Is volatility currently exceeding the safety threshold?" "Is the order book too thin (low liquidity)?"

- Sanity Checks: "Is the AI predicting a 50% price jump in 1 minute?" (likely a hallucination or data error). "Is the order size larger than the total account balance?"
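
A supervisor with veto power can be as simple as a pure function that returns an approve/reject decision plus a reason. The sketch below encodes a few of the checks listed above; the dictionary fields and all thresholds other than the 20% exposure cap are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Limits:
    max_asset_exposure: float = 0.20   # e.g. at most 20% of equity in one asset
    max_spread_pct: float = 0.005      # assumed: reject if spread exceeds 0.5%
    max_predicted_move: float = 0.50   # a 50% forecast is treated as a data error


def validate(order: dict, portfolio: dict, market: dict,
             limits: Optional[Limits] = None) -> tuple:
    """Return (approved, reason) for a proposed buy order."""
    limits = limits or Limits()
    cost = order["qty"] * market["ask"]
    if cost > portfolio["cash"]:
        return False, "insufficient balance"
    exposure = (portfolio["asset_value"] + cost) / portfolio["equity"]
    if exposure > limits.max_asset_exposure:
        return False, "exposure limit"
    spread = (market["ask"] - market["bid"]) / market["bid"]
    if spread > limits.max_spread_pct:
        return False, "spread too wide"
    if abs(order["predicted_move"]) >= limits.max_predicted_move:
        return False, "implausible prediction"
    return True, "ok"
```

Keeping the checks free of side effects makes them easy to unit-test and lets the same function run in backtests and in live trading.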

4. Data Infrastructure: The Foundation of Algorithmic Intelligence

The efficacy of any AI model is strictly bounded by the quality of its input data. "Garbage in, garbage out" is the cardinal rule of machine learning. In the realm of cryptocurrency, data engineering presents unique challenges due to the fragmented nature of liquidity, the lack of standardization across exchanges, and the sheer volume of noise.

4.1 Unified Connectivity via CCXT

The ccxt (CryptoCurrency eXchange Trading) library has established itself as the de facto standard for connecting to cryptocurrency exchanges in Python, JavaScript, and PHP. It acts as a middleware that abstracts the complexities of individual exchange APIs, providing a unified interface for fetching market data and executing orders.

Normalization Challenge:

Binance might return a ticker with the key lastPrice, while Kraken uses c, and Coinbase uses price. A raw implementation would require custom parsers for every exchange. CCXT normalizes these raw JSON responses into a standard dictionary structure. A call to exchange.fetch_ticker('BTC/USDT') will return a standardized dictionary containing keys like 'symbol', 'timestamp', 'high', 'low', 'bid', 'ask', 'last', etc., regardless of the underlying exchange.

Rate Limiting Algorithms:

Exchanges impose strict rate limits (e.g., 1200 requests per minute). Exceeding these results in an IP ban, which is catastrophic for a live bot. CCXT implements a "token bucket" algorithm for rate limiting. When initializing the exchange class, setting 'enableRateLimit': True ensures the library automatically sleeps the thread if requests are being sent too fast. This handles the pacing logic so the developer doesn't have to manually track timestamps of every request.
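
To make the pacing idea concrete, here is a generic token bucket written from scratch. It is not CCXT's internal code; the class and its parameters are illustrative, and the clock and sleep functions are injectable so the logic can be tested without waiting.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, then pace at `rate_per_sec`."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic, sleep=time.sleep):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)
        self._clock = clock
        self._sleep = sleep
        self._last = clock()

    def _refill(self):
        now = self._clock()
        self.tokens = min(self.capacity, self.tokens + (now - self._last) * self.rate)
        self._last = now

    def acquire(self, cost=1.0):
        """Block until `cost` tokens are available; return seconds waited."""
        waited = 0.0
        self._refill()
        while self.tokens + 1e-12 < cost:      # tolerance for float rounding
            delay = (cost - self.tokens) / self.rate
            self._sleep(delay)
            waited += delay
            self._refill()
        self.tokens -= cost
        return waited
```

For a limit of 1200 requests per minute, `TokenBucket(rate_per_sec=20, capacity=20)` would be one reasonable starting point; endpoints that carry different weights can pass a larger `cost`.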

Handling Historical Data Pagination:

Fetching historical OHLCV (candlestick) data is rarely a single API call. Most exchanges limit the response to 500 or 1000 candles. To build a dataset for training a Deep Learning model (which might require years of minute-level data), one must implement a pagination loop.

The standard pattern involves using the since parameter:

- Define a start_time (e.g., timestamp for Jan 1, 2020).

- Request candles starting from start_time with limit=1000.

- Process the received data.

- Update start_time to the timestamp of the last received candle + 1 timeframe (e.g., + 1 minute).

- Repeat until start_time is greater than current_time.
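
The loop above can be sketched as a small function. To keep it testable offline, the exchange call is passed in as `fetch(since, limit)`, a hypothetical wrapper; with CCXT it could wrap `exchange.fetch_ohlcv(symbol, timeframe, since=since, limit=limit)`.

```python
def fetch_all_ohlcv(fetch, start_ms, end_ms, timeframe_ms, limit=1000):
    """Page through history from start_ms (inclusive) to end_ms (exclusive).

    fetch(since, limit) must return candles as
    [timestamp_ms, open, high, low, close, volume], oldest first.
    """
    candles = []
    since = start_ms
    while since < end_ms:
        batch = fetch(since, limit)
        if not batch:                      # no more data available: stop
            break
        candles.extend(c for c in batch if c[0] < end_ms)
        # Advance past the last candle received so pages never overlap.
        since = batch[-1][0] + timeframe_ms
    return candles


# Possible usage with CCXT (requires network access, shown for orientation only):
#   exchange = ccxt.binance()
#   fetch = lambda since, limit: exchange.fetch_ohlcv(
#       "BTC/USDT", "1m", since=since, limit=limit)
#   data = fetch_all_ohlcv(fetch, start_ms, end_ms, 60_000)
```

The empty-batch check guards against an infinite loop when the requested range extends past the data the exchange actually holds.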

4.2 Real-Time Data Streaming with WebSockets

While REST APIs are sufficient for lower-frequency strategies (e.g., daily rebalancing), high-frequency and market-making bots require WebSockets. REST polling adds latency of up to one polling interval (e.g., 1 second) on top of the round-trip time (RTT). In a fast-moving market, a 1-second delay means the price on your screen is "stale" and no longer actionable.

WebSockets provide a persistent, full-duplex communication channel. The exchange pushes updates to the bot the instant a trade occurs or the order book changes.

- Subscription Management: The bot sends a JSON payload to subscribe to specific topics (e.g., trade@BTCUSDT, depth@BTCUSDT).

- Heartbeats: Connections can drop silently. The bot must implement "ping-pong" frames or monitor for a "heartbeat" message to ensure the connection is alive. If silence is detected for longer than a threshold (e.g., 30 seconds), the bot must automatically reconnect and resubscribe.

- Order Book Reconstruction: A common pattern is to fetch a REST snapshot of the order book (to get the initial state) and then apply WebSocket "diffs" or "deltas" (updates) to keep it current. This allows the bot to maintain a local, real-time copy of the exchange's order book without the bandwidth overhead of downloading the full book every 100ms.
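
The snapshot-plus-deltas pattern can be sketched as follows. The field names (`last_update_id`, `update_id`) and the strictly consecutive sequencing rule are assumptions for this example; real exchanges each define their own sequencing fields and rules.

```python
class LocalBook:
    """Local order book kept current by applying diffs to a REST snapshot."""

    def __init__(self, snapshot: dict):
        self.bids = {price: qty for price, qty in snapshot["bids"]}
        self.asks = {price: qty for price, qty in snapshot["asks"]}
        self.last_id = snapshot["last_update_id"]

    def apply(self, diff: dict) -> None:
        if diff["update_id"] <= self.last_id:
            return                                   # already reflected in the snapshot
        if diff["update_id"] != self.last_id + 1:
            raise RuntimeError("sequence gap: re-fetch the snapshot")
        for side, levels in ((self.bids, diff["bids"]), (self.asks, diff["asks"])):
            for price, qty in levels:
                if qty == 0:
                    side.pop(price, None)            # zero quantity removes the level
                else:
                    side[price] = qty                # otherwise replace the level
        self.last_id = diff["update_id"]

    def best_bid(self) -> float:
        return max(self.bids)

    def best_ask(self) -> float:
        return min(self.asks)
```

Floats are used as price keys only for brevity; keeping prices as strings or `Decimal` values avoids two representations of the same level.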

4.3 On-Chain and Sentiment Data Integration

Pure price action is often a lagging indicator—it reflects what has already happened. AI models gain a significant edge by incorporating leading indicators from the blockchain and the social sphere.

On-Chain Metrics: The transparency of public blockchains allows for the monitoring of capital flows before they hit exchange order books. Tools like Alchemy allow bots to monitor the mempool (pending transactions) or specific smart contract events.

- Exchange Inflow: A sudden, large transfer of BTC or ETH from a private wallet to a known exchange wallet often signals an intent to sell. This "exchange inflow" metric can be a powerful bearish feature for an ML model.

- Gas Prices: A spike in gas prices on Ethereum indicates network congestion, often correlated with high-demand NFT mints or panic selling/buying in DeFi.

Sentiment Analysis Pipeline: NLP models process unstructured text data to gauge market psychology. For 2026, using RoBERTa or FinBERT models is standard practice. The workflow involves:

- Ingestion: Pulling tweets or headlines via APIs (e.g., X API, NewsAPI, Cryptopanic).

- Preprocessing: Cleaning the text (removing URLs, normalizing "bullish" to "bull").

- Tokenization: Converting text into numerical tokens. FinBERT uses a specific vocabulary optimized for financial contexts (e.g., it understands that "liability" is negative, whereas a generic model might view it neutrally).

- Inference: The model outputs a probability distribution (Positive, Negative, Neutral).

- Aggregation: A single tweet is noisy. The system calculates a rolling average "Sentiment Score" (e.g., over the last hour) to smooth out the noise. This score becomes a numerical feature (e.g., ranging from -1 to +1) fed into the trading model.
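
The aggregation step can be sketched as a time-windowed running mean. Mapping each classification to a score of P(positive) minus P(negative) is one common convention and is assumed here; the class name is illustrative.

```python
from collections import deque


class RollingSentiment:
    """Mean sentiment score over a trailing time window, in [-1, +1]."""

    def __init__(self, window_seconds: float = 3600):
        self.window = window_seconds
        self._items = deque()      # (timestamp, score), oldest first
        self._sum = 0.0

    def add(self, ts: float, p_pos: float, p_neg: float) -> float:
        score = p_pos - p_neg      # assumed mapping from class probabilities
        self._items.append((ts, score))
        self._sum += score
        # Evict observations that have fallen out of the window.
        while self._items[0][0] <= ts - self.window:
            _, old = self._items.popleft()
            self._sum -= old
        return self._sum / len(self._items)
```

Each call returns the current feature value, so the trading model can read the smoothed score whenever a new headline or post has been classified.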

5. Artificial Intelligence & Machine Learning Models

The core differentiator of an AI trading bot is its ability to learn non-linear patterns and adapt to changing market regimes. While simple regression models can forecast trends, they fail to capture the complex temporal dependencies of crypto markets. We focus on three primary classes of models: Time-Series Forecasting (LSTM/Transformers), Sentiment Analysis (NLP), and Reinforcement Learning (RL).

5.1 Deep Learning for Time-Series: LSTM and GRU

Long Short-Term Memory (LSTM) networks are a specialized type of Recurrent Neural Network (RNN) designed to overcome the "vanishing gradient" problem, which prevents standard RNNs from learning long-term dependencies. In financial time series, an event that happened 50 periods ago (e.g., a sudden volatility spike) might be relevant to the current prediction.

Architecture Mechanics: Unlike a standard feedforward network where data flows one way, LSTMs have internal "states" or memory. The flow of information is controlled by three gates:

- Forget Gate: Decides what information from the previous cell state to discard (e.g., "forget the previous trend direction if a major reversal pattern is detected").

- Input Gate: Decides what new information to store in the cell state.

- Output Gate: Decides what the next hidden state should be, based on the cell state.

Implementation in Trading: In a crypto context, LSTMs are typically fed a 3D tensor of shape (Batch_Size, Time_Steps, Features).

- Time_Steps: The "lookback" window, e.g., the last 60 minutes of data.

- Features: The variables at each time step (Close Price, Volume, RSI, Sentiment Score).

The model learns to map this sequence to a target, such as the price at t+1 (regression) or the direction of the next move (classification).
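
To show how a flat feature table becomes the (Batch_Size, Time_Steps, Features) input, here is a plain-Python windowing sketch. The function name is illustrative, and in practice the nested lists would be converted to a NumPy array or framework tensor.

```python
def make_windows(rows: list, time_steps: int, target_col: int = 0):
    """Slice per-step feature rows (oldest first) into lookback windows.

    Returns X with shape (samples, time_steps, features) as nested lists
    and y with the target value that immediately follows each window.
    """
    X, y = [], []
    for end in range(time_steps, len(rows)):
        X.append(rows[end - time_steps:end])   # steps end-time_steps .. end-1
        y.append(rows[end][target_col])        # value at step `end` (t+1)
    return X, y
```

Each window stops one step before its target, so the model never sees the value it is asked to predict.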

Enhancements:

- Bidirectional LSTMs: These process the data sequence in both forward and backward directions, so the representation of each price point draws on both earlier and later steps within the input window. Because the window ends at the current time step, the "future" context exists only inside the lookback window; feeding the model any data from after the prediction time would leak the label.

- Attention Mechanisms: Integrating attention layers allows the model to assign different "weights" to different time steps. It might learn that the candle with the highest volume in the sequence is the most critical predictor, regardless of where it occurred in the timeline.

5.2 Reinforcement Learning (RL): The Adaptive Agent

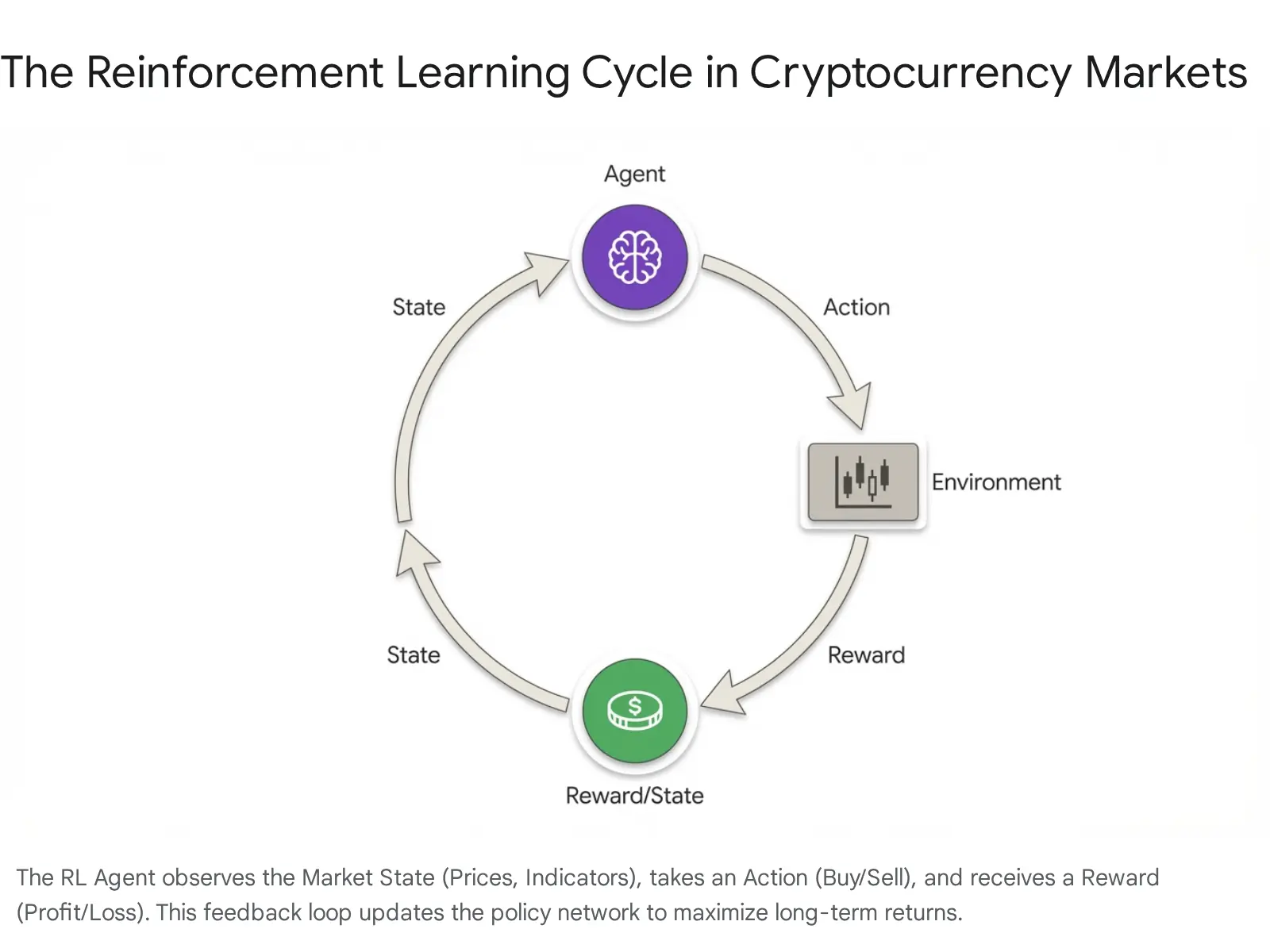

Reinforcement Learning represents the frontier of automated trading. Unlike supervised learning, which requires labelled datasets (e.g., "at this point, the correct price was X"), RL learns by doing. It optimizes a policy through trial and error to maximize a cumulative reward.

The MDP Formulation: Trading is modeled as a Markov Decision Process (MDP):

- Agent: The trading bot.

- Environment: The market (Order Book, Account Balance, Position).

- State (S_t): A snapshot of the environment at time t. This is the input vector (Prices, Indicators, Balance).

- Action (A_t): The decision taken (Buy, Sell, Hold, Close).

- Reward (R_t): The feedback signal. This is critical. A naive reward is simply "Profit/Loss". However, this encourages reckless gambling. A better reward function is the Sharpe Ratio or Sortino Ratio, which penalizes volatility and downside risk. R_t = Return - Lambda × Risk.
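
A minimal sketch of the risk-penalized reward R_t = Return - Lambda × Risk follows. Measuring risk as the standard deviation of recent step returns is an assumption made for this example; a Sortino-style variant would use only the negative returns.

```python
from statistics import pstdev


def risk_adjusted_reward(step_return: float, recent_returns: list, lam: float = 0.5) -> float:
    """R_t = Return - Lambda * Risk, with risk as trailing return volatility."""
    risk = pstdev(recent_returns) if len(recent_returns) > 1 else 0.0
    return step_return - lam * risk
```

Larger values of `lam` push the agent toward smoother equity curves at the cost of raw return, so this is a hyperparameter worth tuning alongside the learning rate.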

Algorithms:

- Deep Q-Networks (DQN): The agent learns a Q-function that estimates the expected future reward of taking action A in state S.

- Proximal Policy Optimization (PPO): A policy gradient method that is generally more stable to train than DQN, although as an on-policy method it usually needs more environment samples. It limits how much the policy can change in a single update, preventing the agent from learning "wild" behaviors that lead to catastrophic ruin.

Advantages: RL agents can discover complex, multi-step strategies that are hard to hard-code. For example, an RL agent might learn to "scale in" to a position (buying small amounts as price drops) or to hold a losing position if the market microstructure suggests a liquidity hunt (stop run) is occurring, rather than simply hitting a hard stop-loss.

Backtesting Frameworks

Don't write your own backtester. Use VectorBT for rapid grid search (vectorized) or Freqtrade for a complete, production-ready environment.

6. Strategy Development: From Signal to Logic

While the AI model provides a signal (prediction), the Strategy Logic defines how that signal is used. A raw prediction of "Price Up" is not a trade; the strategy must define entry, exit, and sizing rules.

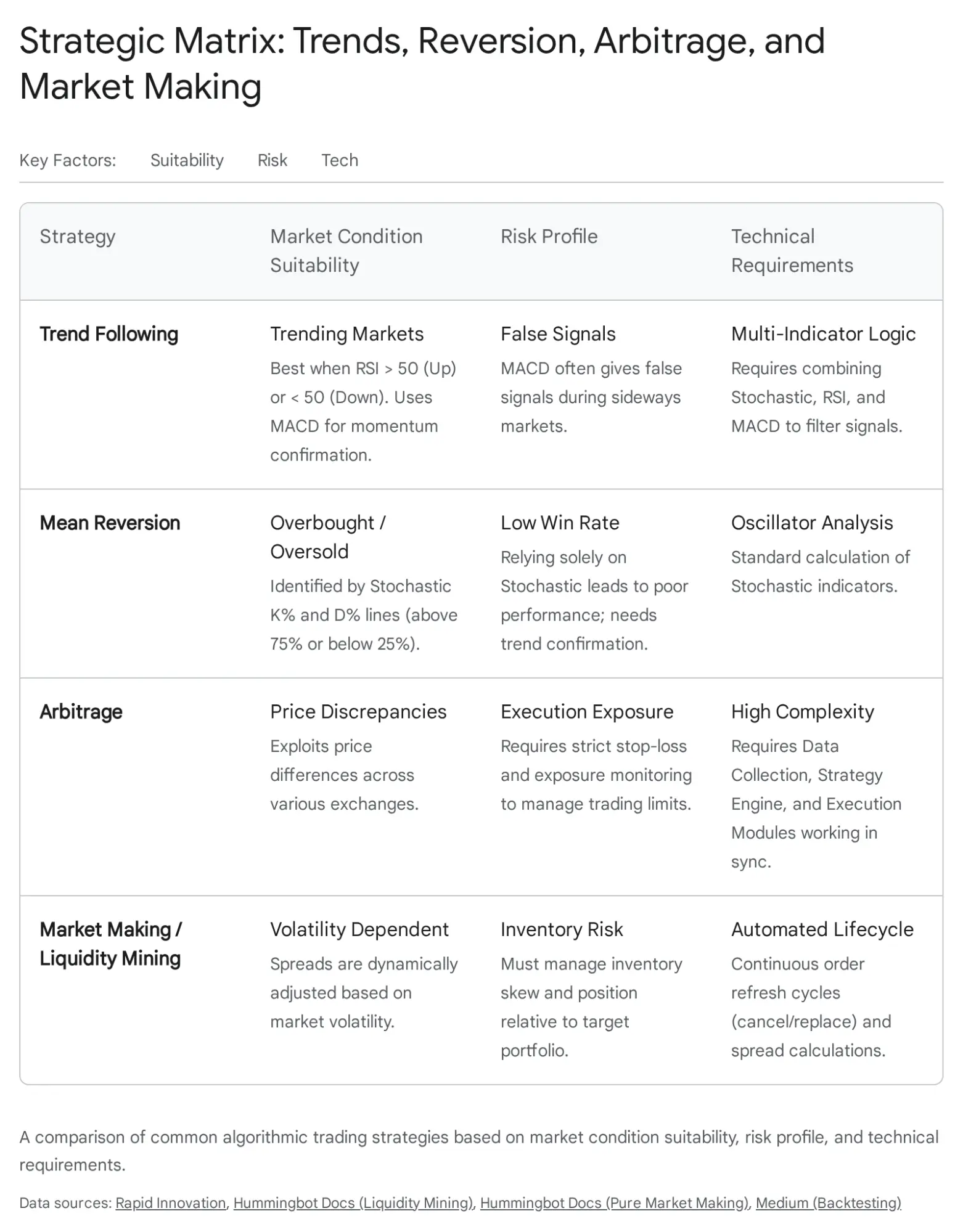

6.1 Trend Following and Mean Reversion

- Trend Following: The assumption is that assets in motion stay in motion. An LSTM might be trained to detect the onset of a trend by identifying specific volume-price divergence patterns. The strategy enters long when the model predicts a sustained rise and exits when the trend strength (ADX) weakens.

- Mean Reversion: The assumption is that price oscillates around a central value. Strategies use indicators like RSI or Bollinger Bands. A common bot logic: "Short when Price > Upper Bollinger Band AND RSI > 70". VectorBT and Pandas-TA are excellent for backtesting these indicator-based logics.

6.2 Arbitrage and Market Making

These strategies rely less on predicting direction and more on market inefficiencies.

- Arbitrage: Exploiting price differences for the same asset across exchanges (e.g., BTC is $100 cheaper on Kraken than Binance). This requires monitoring the "spread" and executing simultaneous Buy/Sell orders. Speed is paramount; Python is often too slow for the execution leg of HFT arbitrage, leading developers to write the execution logic in C++ or Rust.

- Market Making: Providing liquidity by placing limit orders on both sides of the book (Bid and Ask) to capture the spread. Tools like Hummingbot dominate this space. In 2026, "Liquidity Mining" strategies optimize these bots to not just earn the spread but also to capture token rewards offered by DEXs for providing liquidity.

6.3 Backtesting Frameworks: VectorBT vs. Backtrader vs. Freqtrade

Choosing the right simulation environment is critical to avoid wasting time.

- VectorBT: Uses "vectorization" (NumPy/Pandas operations) to simulate millions of trades instantly. It calculates the strategy outcome for the entire dataset in one go. Ideally suited for "Grid Search" optimization (finding the best parameters for an RSI strategy). Downside: Hard to model complex, path-dependent logic (e.g., "if I bought yesterday, move my stop loss today based on today's volatility").

- Backtrader: Event-driven. It loops through data one candle at a time, mimicking a live broker. Excellent for complex logic and visualizing trades. Downside: Significantly slower than VectorBT.

- Freqtrade: A complete bot platform that includes backtesting, live trading, and plotting. It uses a DataFrame approach similar to VectorBT but includes robust "Batteries Included" features like Telegram integration, multiple exchange support, and Docker images. It is the recommended starting point for most Python-based bot developers in 2026.

7. Risk Management: The Math of Survival

In the high-variance domain of crypto, risk management is mathematically more important than signal accuracy. A strategy with a 90% win rate can still bankrupt a portfolio if the 10% losses are catastrophic ("Gambler's Ruin"). The Risk Supervisor module is the defense against this.

7.1 Position Sizing Algorithms

Arbitrary sizing (e.g., "put $1000 on every trade") is suboptimal. Advanced bots use dynamic sizing.

- Kelly Criterion: A formula to calculate the optimal bet size to maximize the expected logarithm of wealth.

f* = (bp - q) / b

where f* is the fraction of the bankroll to wager, b is the net odds received on the wager (payout), p is the probability of winning, and q is the probability of losing (1 - p).

- Crypto Nuance: Full Kelly is incredibly volatile and can lead to 90% drawdowns. Practitioners typically use "Half-Kelly" or "Quarter-Kelly" to dampen volatility while still growing the pot.

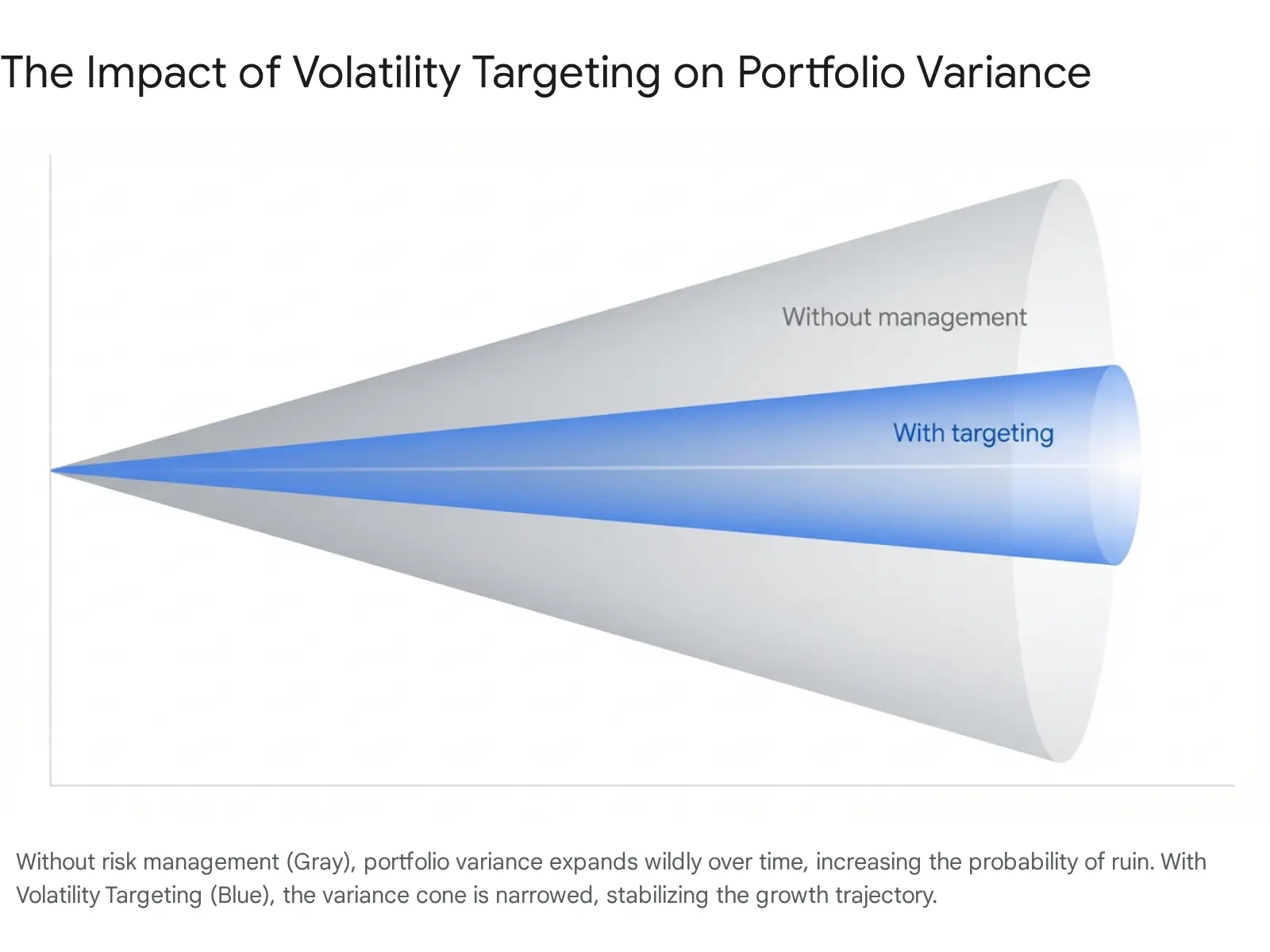

- Volatility Targeting: This method adjusts size based on the asset's current volatility (measured by the Average True Range, ATR).

Position Size = (Account Value × Risk %) / ATR

If Bitcoin's volatility doubles, the bot automatically halves the position size. This ensures that the dollar risk remains constant regardless of whether the market is calm or chaotic.
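
Both sizing rules above reduce to a few lines of arithmetic. In this sketch the function names are illustrative, and ATR is computed as a simple average of true ranges rather than Wilder's smoothed version, which is a simplification.

```python
def kelly_fraction(p: float, b: float, scale: float = 1.0) -> float:
    """f* = (b*p - q) / b with q = 1 - p; scale=0.5 gives Half-Kelly."""
    q = 1.0 - p
    f = (b * p - q) / b
    return max(0.0, f) * scale          # a negative edge means no bet


def true_range(high: float, low: float, prev_close: float) -> float:
    return max(high - low, abs(high - prev_close), abs(low - prev_close))


def atr(candles: list, period: int = 14) -> float:
    """candles: (high, low, close) tuples, oldest first; simple mean of TR."""
    trs = [true_range(h, l, candles[i - 1][2])
           for i, (h, l, _) in enumerate(candles) if i > 0]
    recent = trs[-period:]
    return sum(recent) / len(recent)


def position_size(account_value: float, risk_pct: float, atr_value: float) -> float:
    """Units of the asset such that a one-ATR move costs risk_pct of the account."""
    return account_value * risk_pct / atr_value
```

For example, with a 60% win probability at even odds (b = 1), full Kelly is (0.6 - 0.4) / 1 = 0.2 of the bankroll and Half-Kelly is 0.1. For volatility targeting, a 10,000 USDT account risking 1% sizes to 0.2 units when ATR is 500 and 0.1 units when ATR doubles to 1,000.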

7.2 Stop-Loss Mechanics

- Trailing Stop: A fixed stop loss is static. A trailing stop moves up as the price moves in your favor, locking in profits. The "Chandelier Exit" is a popular algorithm: it places the stop at Highest High of last N periods - (Multiplier * ATR). This allows the trade to breathe during normal volatility but exits immediately if the trend breaks.

- Time-Based Exits: If a trade has not become profitable within a certain timeframe (e.g., 6 hours), the "thesis" is likely wrong. Closing the trade frees up capital ("Opportunity Cost management") and reduces exposure to overnight risks.
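
The Chandelier Exit formula above translates directly into code. This sketch covers the long side only; the defaults of 22 periods and a 3x multiplier are commonly cited settings and are assumptions here, not values taken from the text.

```python
from typing import Optional


def chandelier_stop(highs: list, atr_value: float, n: int = 22, multiplier: float = 3.0) -> float:
    """Highest high of the last n periods minus multiplier * ATR."""
    return max(highs[-n:]) - multiplier * atr_value


def trail(prev_stop: Optional[float], new_stop: float) -> float:
    """A long-side trailing stop only ratchets upward, never down."""
    return new_stop if prev_stop is None else max(prev_stop, new_stop)
```

On each new candle the bot would recompute `chandelier_stop`, pass the result through `trail`, and exit the position if the latest price trades below the resulting level.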

7.3 Circuit Breakers

Automated "kill switches" protect against "unknown unknowns" (e.g., a flash crash or an API bug).

- Drawdown Limit: "If Equity < 85% of Start Equity, HALT TRADING."

- Consecutive Loss Limit: "If 5 consecutive losses occur, PAUSE and send alert."

- API Error Rate: If the exchange API starts returning 5xx errors (server overload), the bot should enter a "safe mode" and stop sending new orders to prevent order duplication or stuck states.
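
The three kill switches above can share one small state machine. In this sketch every trigger halts trading until a human resets it, which simplifies the "pause and alert" behavior described for consecutive losses; the class name and the threshold of three consecutive 5xx responses are assumptions.

```python
class CircuitBreaker:
    """Latches into a halted state when any safety rule trips."""

    def __init__(self, start_equity, min_equity_ratio=0.85,
                 max_consecutive_losses=5, max_api_errors=3):
        self.start_equity = start_equity
        self.min_equity_ratio = min_equity_ratio
        self.max_consecutive_losses = max_consecutive_losses
        self.max_api_errors = max_api_errors
        self.losses = 0
        self.api_errors = 0
        self.reason = None              # first rule that tripped, if any

    def _trip(self, reason):
        if self.reason is None:
            self.reason = reason

    def on_equity(self, equity):
        if equity < self.min_equity_ratio * self.start_equity:
            self._trip("drawdown limit")

    def on_trade_closed(self, pnl):
        self.losses = self.losses + 1 if pnl < 0 else 0   # a win resets the streak
        if self.losses >= self.max_consecutive_losses:
            self._trip("consecutive losses")

    def on_api_response(self, status_code):
        self.api_errors = self.api_errors + 1 if status_code >= 500 else 0
        if self.api_errors >= self.max_api_errors:
            self._trip("api error rate")

    @property
    def trading_allowed(self):
        return self.reason is None
```

The execution layer would check `trading_allowed` before every new order, while still being permitted to cancel or reduce existing exposure.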


8. Infrastructure, Deployment, and Security

A trading bot running on a home laptop is a hobby project. A professional deployment requires robust cloud infrastructure to ensure 24/7 uptime, low latency, and security.

8.1 Dockerization

Containerizing the bot using Docker is industry standard. It packages the code, the specific Python version, and all dependencies (pandas, tensorflow, ccxt) into a single "image". This eliminates the "it works on my machine" problem. A Dockerfile defines the environment, and docker-compose can be used to orchestrate the bot alongside its database (e.g., Redis for caching).

8.2 Cloud Hosting (VPS)

Reliability is key.

- Virtual Private Servers (VPS): AWS EC2, DigitalOcean Droplets, or Vultr.

- Latency Optimization (Co-location): For arbitrage bots, the server should be located in the same cloud region as the exchange's matching engine (e.g., AWS Tokyo for Binance). This minimizes network latency.

- Process Management: Tools like systemd (Linux) or PM2 (Node.js) ensure that if the bot process crashes, it is restarted automatically.

API Key Security

NEVER hardcode keys in source code. Load them from environment variables (for example, via a .env file). On the exchange, disable "Withdrawal" permissions for all trading keys.

8.3 Security Best Practices

Trading bots hold the keys to the bank. Security cannot be an afterthought.

- API Key Management: NEVER hardcode API keys in the source code. If that code is pushed to GitHub, the funds will be stolen in seconds by scraper bots. Use Environment Variables. Create a .env file (added to .gitignore) and load keys using the python-dotenv library.

      import os
      from dotenv import load_dotenv

      load_dotenv()  # read KEY=value pairs from .env into the process environment
      api_key = os.getenv("BINANCE_API_KEY")

- Least Privilege: When creating API keys on the exchange, enable "Trade" permissions but DISABLE "Withdrawal" permissions. This ensures that even if a hacker steals the keys, they cannot transfer the funds out.

- Encryption: Use encrypted secrets managers (like AWS Secrets Manager or HashiCorp Vault) for enterprise-grade deployments.

9. Tool Recommendations and 2026 Ecosystem

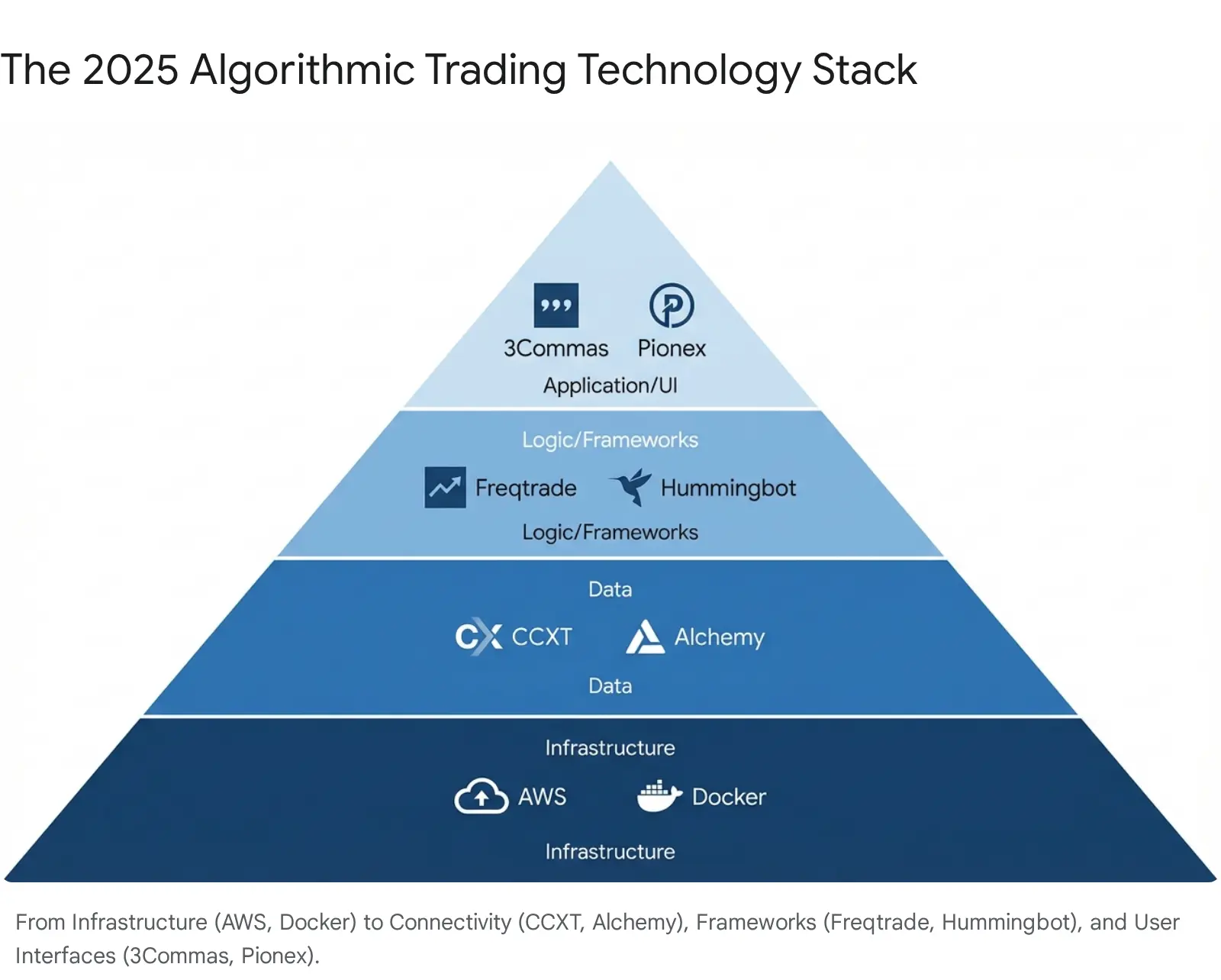

The ecosystem has matured significantly. The choice of tool depends on the user's technical proficiency and goal.

9.1 No-Code/Low-Code Platforms (Retail)

- 3Commas: Known for its "Smart Trade" terminal and DCA (Dollar Cost Averaging) bots. Good for managing manual trades and simple automations.

- Cryptohopper: Offers a drag-and-drop strategy designer and a marketplace for buying strategies. Cloud-based, so no installation is required.

- Pionex: An exchange that integrates free trading bots (Grid, DCA) directly into the platform. Because the bots run inside the exchange, no API keys need to be created or shared with a third party, which makes it one of the simplest options for absolute beginners.

9.2 Developer Frameworks (Pro/Eng)

- Freqtrade: The gold standard for Python developers. Open-source, supports backtesting, hyperparameter optimization (Hyperopt), and has a large active community. It handles the "plumbing" (exchange connection, error handling) so you can focus on the strategy logic.

- Hummingbot: Specialized for market making and arbitrage strategies. It has a modular architecture with "connectors" for various centralized (Binance, Coinbase) and decentralized (Uniswap, dYdX) exchanges.

9.3 Data & Infrastructure

- CCXT: The essential library for raw connectivity.

- CoinGecko / CoinMarketCap API: For global market data (market cap, dominance).

- Alchemy / Infura: For direct blockchain data access (essential for on-chain analysis).


10. Regulatory Landscape and Future Outlook

The "Wild West" era of crypto is concluding. In 2026, regulatory compliance is a technical requirement, not just a legal one.

MiCA Compliance

The EU's Markets in Crypto-Assets regulation impacts algorithmic trading. Ensure your strategies do not inadvertently trigger market abuse flags (wash trading, spoofing).

10.1 Regulatory Compliance

- MiCA (EU): The Markets in Crypto-Assets regulation is now fully effective. It sets strict standards for transparency and operation. Bot operators managing third-party funds or offering "signals" as a service may need to register as crypto-asset service providers (CASPs). Algorithmic trading firms must have systems to prevent market abuse (wash trading, spoofing).

- KYC/AML: Exchanges are enforcing mandatory KYC. Bots must be robust enough to handle "frozen" account states if compliance checks are triggered unexpectedly.

- Taxation: Every trade is a taxable event in many jurisdictions. Bots should ideally export trade logs in a format compatible with crypto tax software (e.g., Koinly, CoinLedger) to automate the reporting burden.

10.2 The Future: Agentic AI

Next-generation bots are not just "traders" but "agents." Leveraging Large Language Models (LLMs), these agents can perform cognitive tasks: reading whitepapers to evaluate new tokens, parsing Federal Reserve meeting minutes to gauge macro sentiment, and even coordinating with other agents in a swarm intelligence network to execute complex strategies.

11. Conclusion

Automating crypto trading is a multidisciplinary engineering challenge that blends financial theory, software architecture, and artificial intelligence. While tools like Freqtrade and CCXT lower the barrier to entry, the edge comes from the sophisticated application of AI models (LSTMs, RL) and rigorous, mathematically sound risk management. As the market matures and regulation tightens, the successful trader of 2026 will be less of a speculator and more of a systematic risk manager, overseeing a fleet of autonomous agents that execute with the precision of machines and the adaptability of neural networks.

Further Reading

- Tools Overview: AI Crypto Trading Tools 2026

- Beginner Tutorial: Build Your First AI Bot

Frequently Asked Questions


Financial data is non-linear and time-dependent. LSTMs can capture temporal patterns and 'memories' of market behavior that simple regression misses.

While Python is dominant for research and strategy logic, the execution layer of true HFT systems is often written in C++ or Rust for microsecond latency.

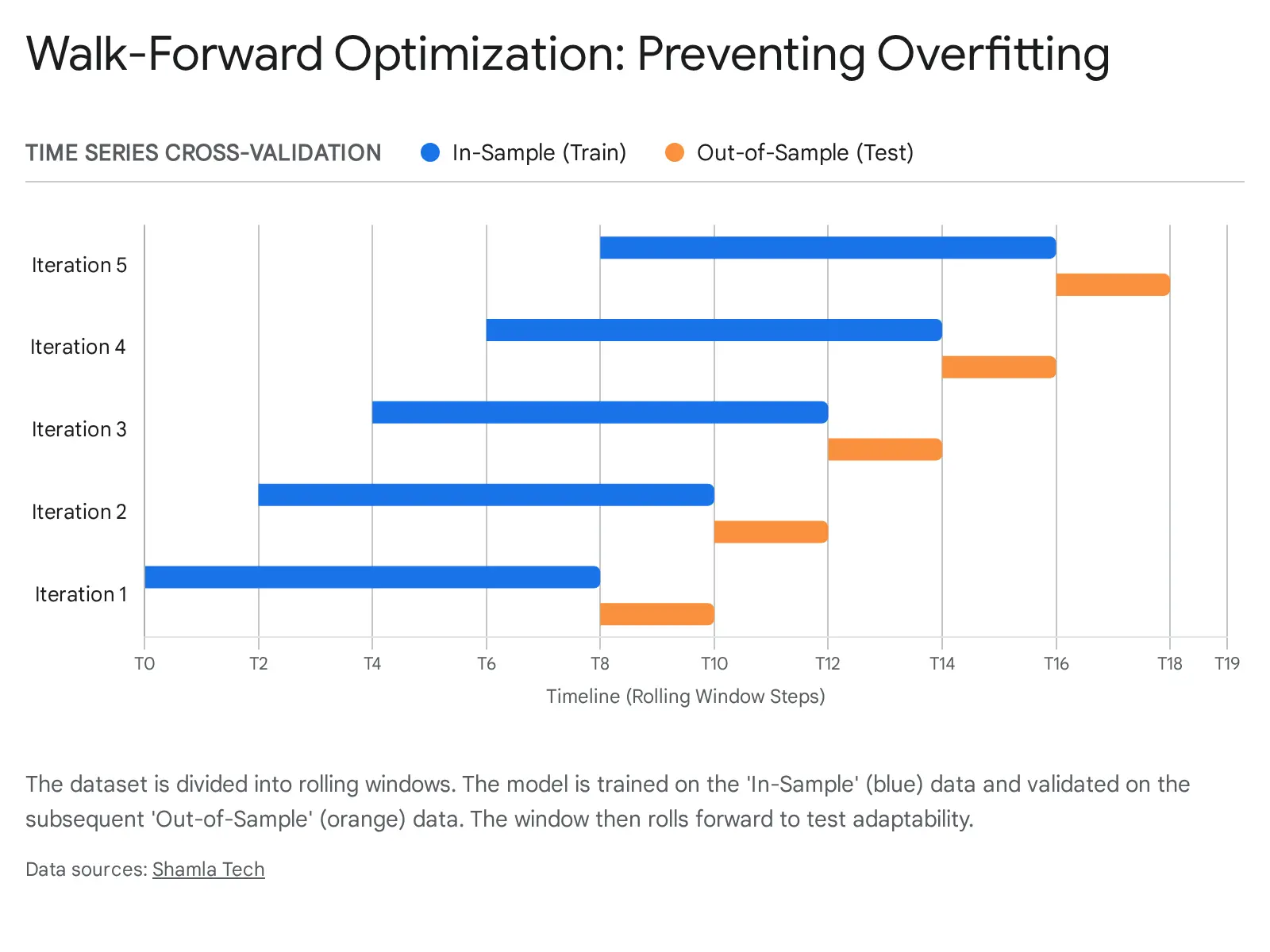

Use 'Walk-Forward Optimization' instead of simple cross-validation. Split data into training, validation, and out-of-sample testing periods that move forward in time.
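
A walk-forward split can be generated with simple index arithmetic. This is a sketch with illustrative names; it assumes the data is ordered oldest first and that the window rolls forward by one test block unless `step` is given.

```python
def walk_forward_splits(n: int, train: int, val: int, test: int, step: int = 0):
    """Yield (train, validation, test) index ranges that roll forward in time."""
    step = step or test
    start = 0
    while start + train + val + test <= n:
        a = start + train
        b = a + val
        c = b + test
        yield range(start, a), range(a, b), range(b, c)
        start += step
```

Each fold trains on the oldest block, tunes on the next, and reports on data the model has never seen, so every test period lies strictly after the data used to fit it.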